Classification and Regression – both techniques are part of supervised machine learning. Both share one common goal: to make predictions or decisions using past data as the underlying foundation. There is one major difference as well: in classification, the predictive output is a label, and in regression, it is a quantity. Generative algorithms can also be used as classifiers; it just so happens that they can do more than categorize the input data. Classification can be thought of as a sorting technique, and regression as a connecting technique.

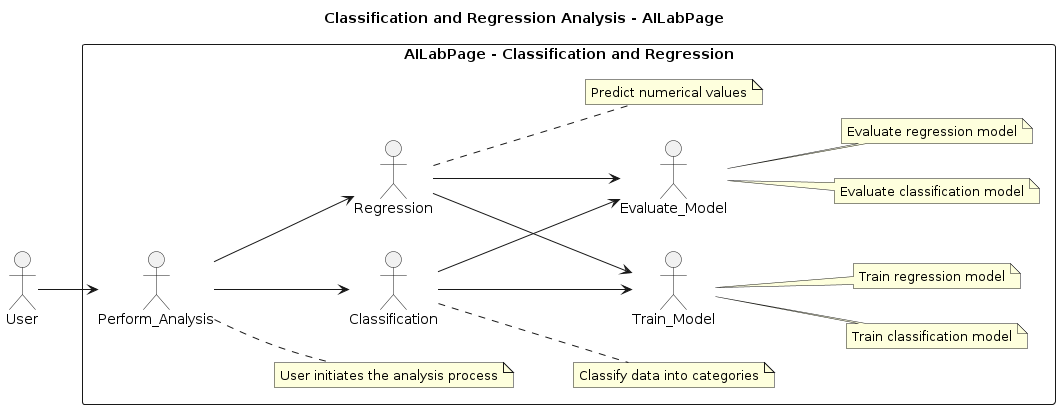

Classification and Regression – AILabPage’s Outlook

Classification and Regression are two essential components of supervised machine learning, a field where algorithms are trained using labeled data to make predictions or decisions. Their shared primary objective is to utilize historical data as the basis for learning and inference.

However, a significant distinction exists between the two techniques: while classification produces predictive output in the form of labels (assigning data points to specific categories or classes), regression generates output in the form of quantities, aiming to establish relationships between variables and predict numerical values.

- In classification, the goal is to assign input data points to predefined categories or classes. The predictive output generated by this technique is in the form of discrete labels, indicating which class each data point belongs to. For instance, it can be used to classify emails as spam or non-spam, or to identify different species of plants based on their features.

- Regression aims to predict continuous numerical values. It establishes relationships between input variables and corresponding output values, allowing the model to make predictions on new data. For example, regression can be used to predict housing prices based on factors such as area, number of rooms, and location.

Additionally, generative algorithms, which model how the data is generated and can create new data similar to the training data, can also function as classifiers: they learn the patterns and features of each class and assign a new input to the most likely class, so they go beyond mere categorization. Classification can therefore be likened to a sorting technique that organizes data into distinct classes, while regression can be seen as a method that connects inputs to continuous values based on patterns observed in the training data.

Machine Learning (ML) – Basic Terminologies in Context

Machine Learning – Basics

AILabPage defines machine learning as “A focal point where business, data and experience meet emerging technology and decide to work together.”

ML instructs an algorithm to learn for itself by analyzing data. Depending on the setting, the algorithm learns a mapping from inputs to outputs, detects patterns, or learns from rewards. In general, the more relevant data it processes, the better its predictions tend to become.

Thanks largely to statistics, machine learning became very popular in the 1990s. Machine learning is about developing and using learning algorithms. The intersection of computer science and statistics gave birth to probabilistic approaches in AI.

This shifted the field further toward data-driven approaches. Data science, in contrast, is more about extracting knowledge from data (knowledge discovery in databases, KDD) through algorithms to answer a particular question or solve a particular problem.

In other words, machine learning algorithms “learn” from observations. When exposed to more observations, an algorithm typically improves its predictive performance.
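The claim that more observations usually improve predictive performance can be checked with a small experiment. The sketch below, an illustration rather than a benchmark, trains the same k-nearest-neighbours classifier on growing subsets of scikit-learn's bundled digits dataset and reports test accuracy. The subset sizes are arbitrary choices, exact numbers will vary, and the improvement is a tendency, not a guarantee: noisy or unrepresentative data can stop it.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

X, y = load_digits(return_X_y=True)
# train_test_split shuffles by default, so each prefix of X_train is a random subset
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

results = {}
for n in [30, 100, 300, len(X_train)]:
    model = KNeighborsClassifier(n_neighbors=3)
    model.fit(X_train[:n], y_train[:n])  # learn from the first n observations only
    results[n] = accuracy_score(y_test, model.predict(X_test))
    print(f"{n:5d} training examples -> test accuracy {results[n]:.3f}")
```

The test set stays fixed for every run, so differences in accuracy come only from how many observations the model was allowed to learn from.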

Classification – Class Of An Object

In classification, predictions are made by assigning outputs to different categories; in other words, it is the process of predicting the class of given data points. Because classification outputs fall into discrete categories, the algorithms used here produce their outputs as discrete labels, targets, or categories.

```python
# Importing necessary libraries
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

# Load the Iris dataset (a commonly used dataset for classification)
data = load_iris()
X, y = data.data, data.target

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create a k-Nearest Neighbors classifier
classifier = KNeighborsClassifier(n_neighbors=3)

# Train the classifier on the training data
classifier.fit(X_train, y_train)

# Make predictions on the test data
predictions = classifier.predict(X_test)

# Calculate the accuracy of the classifier
accuracy = accuracy_score(y_test, predictions)
print(f"Accuracy: {accuracy}")
```

I have used the k-Nearest Neighbors algorithm in the above code to classify the Iris dataset into three classes (setosa, versicolor, and virginica). Then I calculated the accuracy of the classifier to evaluate its performance.

Classification makes business decisions easier because its outputs or predictions come from a finite set of possible values, i.e., outcomes. Whether an email is spam or not is a classification problem; since there are only two classes, it is a binary classification problem. Some useful examples in this category are:

- Determining whether an email is spam or not

- Determining whether something is harmful or not

- Predicting rainfall or no rainfall tomorrow

Binary outcomes like these are commonly modeled with logistic regression.

Customer segmentation, audio and video classification, and sentiment analysis of text are examples where the output can be one of several labels. Besides logistic regression, the best-known classification algorithms include k-nearest neighbours and decision trees.
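As a small illustration of the three algorithms named above, the sketch below trains logistic regression, k-nearest neighbours, and a decision tree on scikit-learn's bundled breast cancer dataset, a binary task (malignant vs. benign) with the same shape as the spam/not-spam examples. The hyperparameters (`max_iter=1000`, `n_neighbors=5`, `max_depth=4`) are arbitrary choices for this sketch, and the accuracies are not a benchmark.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

# Binary task: malignant (0) vs benign (1) tumours
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

models = {
    # Feature scaling helps both logistic regression and distance-based KNN
    "Logistic regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "k-nearest neighbours": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "Decision tree": DecisionTreeClassifier(max_depth=4, random_state=42),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: accuracy {scores[name]:.3f}")

# Logistic regression also yields a probability for the positive class (benign)
proba = models["Logistic regression"].predict_proba(X_test[:3])[:, 1]
print("P(benign) for the first three test cases:", proba.round(3))
```

All three share the same `fit`/`predict` interface, which makes it cheap to try several classifiers on one problem before choosing.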

Regression – Numbers Game

In regression, the system attempts to predict a value for an input based on past data. Regression outputs are real-valued numbers that exist in a continuous space, which makes regression a useful method for predicting continuous outputs. Because a regression model predicts quantities, its performance is evaluated by the size of its prediction errors (for example, the mean squared error) rather than by whether each prediction is exactly right.

```python
# Importing necessary libraries
from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error

# Load the California Housing dataset (a commonly used dataset for regression).
# Note: load_boston was removed in scikit-learn 1.2, so it can no longer be imported.
# fetch_california_housing downloads the data on first use and caches it locally.
data = fetch_california_housing()
X, y = data.data, data.target

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create a Linear Regression model
regressor = LinearRegression()

# Train the model on the training data
regressor.fit(X_train, y_train)

# Make predictions on the test data
predictions = regressor.predict(X_test)

# Calculate the mean squared error of the model
mse = mean_squared_error(y_test, predictions)
print(f"Mean Squared Error: {mse}")
```

In the above code, I have used Linear Regression to predict housing prices in the California Housing dataset, whose target is the median house value of a district in units of $100,000. I have measured the model's performance using the mean squared error, which quantifies the average squared difference between the predicted and actual house values.
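To show what the mean squared error actually measures, the following sketch computes it by hand with NumPy on three made-up predictions, alongside two related error measures (root mean squared error and mean absolute error) that the example above does not use. scikit-learn's `mean_squared_error(y_true, y_pred)` returns the same MSE value.

```python
import numpy as np

# Tiny illustrative numbers (not from any dataset)
y_true = np.array([3.0, 5.0, 2.5])
y_pred = np.array([2.5, 5.0, 3.0])

errors = y_pred - y_true          # [-0.5, 0.0, 0.5]
mse = np.mean(errors ** 2)        # average squared error
rmse = np.sqrt(mse)               # square root brings it back to the target's units
mae = np.mean(np.abs(errors))     # average absolute error

print(f"MSE:  {mse:.4f}")   # 0.1667
print(f"RMSE: {rmse:.4f}")  # 0.4082
print(f"MAE:  {mae:.4f}")   # 0.3333
```

Squaring makes MSE punish large errors much more than small ones; MAE weighs every unit of error equally, and RMSE is easier to read because it is in the same units as the target.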

Linear regression is one of the most widely used algorithms, yet it is often underrated because of its simplicity, the same simplicity that makes it easy to understand, easy to use, and popular. It is an extremely versatile method that can be used for predicting:

- The temperature of the day or in an hour

- Likely housing prices in an area

- Likelihood of customers to churn (a probability, which is more commonly modeled with logistic regression)

- Revenue per customer.
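Part of linear regression's appeal is that the simple (one-feature) case has a closed-form least-squares solution. The sketch below fits a line to five made-up, hypothetical area/price pairs using the textbook formulas for slope and intercept, then cross-checks the result with NumPy's `np.polyfit`.

```python
import numpy as np

# Hypothetical data: house area (m^2) and price (thousands of dollars)
area = np.array([50.0, 70.0, 90.0, 110.0, 130.0])
price = np.array([152.0, 188.0, 231.0, 269.0, 310.0])

# Least-squares slope and intercept for price = b0 + b1 * area
x_mean, y_mean = area.mean(), price.mean()
b1 = np.sum((area - x_mean) * (price - y_mean)) / np.sum((area - x_mean) ** 2)
b0 = y_mean - b1 * x_mean

print(f"price = {b0:.2f} + {b1:.3f} * area")               # price = 51.35 + 1.985 * area
print(f"Predicted price for 100 m^2: {b0 + b1 * 100:.2f}")  # 249.85

# Cross-check: a degree-1 polynomial fit returns [slope, intercept]
slope, intercept = np.polyfit(area, price, 1)
print(f"np.polyfit: slope {slope:.3f}, intercept {intercept:.2f}")
```

With several input features the same idea generalizes to solving a least-squares system, which is what `LinearRegression` in the earlier example does.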

Another good example of a regression problem is forecasting from time-ordered inputs, so-called time-series data. In simple terms, regression is useful for predicting continuous outputs: the predictive model approximates a mapping function from the inputs to a continuous output variable.
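To make the time-series case concrete, the sketch below turns a short, made-up (hypothetical) series into a regression problem by using the previous value as the input feature (a lag-1 feature) and the next value as the target, then fits scikit-learn's `LinearRegression` and forecasts one step ahead. Real forecasting usually needs more lags, seasonality handling, and time-ordered validation; this only shows the framing.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical monthly series with a steady upward trend
series = np.array([10.0, 12.0, 14.0, 16.0, 18.0, 20.0, 22.0, 24.0])

# Turn the time series into a supervised regression problem:
# each input is the previous value (lag 1), each target is the next value
X = series[:-1].reshape(-1, 1)  # y(t-1)
y = series[1:]                  # y(t)

model = LinearRegression().fit(X, y)

# Forecast the next, not-yet-observed value from the last observation
next_value = model.predict([[series[-1]]])[0]
print(f"Forecast for next step: {next_value:.1f}")  # 26.0
```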

Function Approximation in Classification and Regression

Finding the best function to map input variables to output variables is the main task for any machine learning algorithm.

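Classification and regression both approximate such a function, but the kind of function differs: a classifier maps inputs to discrete class labels, while a regressor maps them to continuous values. That is why they are treated as two different tasks.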
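One common way to write this down (a standard formulation, not one taken from this post) is empirical risk minimization: from a family of candidate functions $\mathcal{F}$, choose the function with the smallest average loss $L$ over the $n$ training pairs $(x_i, y_i)$. The two tasks differ in the type of $y_i$ and in the loss that measures a wrong prediction:

$$
\begin{aligned}
\hat{f} &= \arg\min_{f \in \mathcal{F}} \frac{1}{n} \sum_{i=1}^{n} L\bigl(y_i, f(x_i)\bigr) \\
\text{regression: } & y_i \in \mathbb{R}, \quad L(y, \hat{y}) = (y - \hat{y})^2 \\
\text{classification: } & y_i \in \{1, \dots, K\}, \quad L(y, \hat{y}) = \mathbf{1}[\, y \neq \hat{y} \,]
\end{aligned}
$$

With the squared loss, the average is exactly the mean squared error used to evaluate regression models. In practice, classifiers usually minimize a smooth surrogate such as cross-entropy (log loss) instead of the 0-1 loss directly, because the 0-1 loss is not differentiable.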

To understand why this function is important, you can read How Machine Learning Algorithms Work for more details.

After finding the best model, with the best algorithm as its underlying foundation, it becomes much simpler to obtain a good mapping function. System resources, available time, and the amount and quality of data are critical factors in choosing the algorithm.

The end goal is to build a predictive model from past data that can answer questions about new or future data. At its core, this is a mathematical problem.

Classification vs Regression

As is clear from the explanations of classification and regression above, both methods are used in supervised learning, where input variables are mapped to outputs; which one applies depends on whether the output is a continuous value (regression) or a label (classification).

Example: Imagine your company needs to launch a product, and as head of the product rollout you want to do some research and analytics to find out whether the product will be successful. What is required here?

Data on similar products that are succeeding in the market and on similar products that failed and went out of the market, including:

- Price charged

- Marketing budget

- Competitor price

- Target customer segmentation, i.e., education level, earnings, age, home and work address, buying habits, etc.

- Market size

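Several other variables could be added to make the predictive model more accurate. Predicting whether the product will succeed or fail is a classification task; predicting a quantity from the same variables, such as expected sales, would be a regression task.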
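The same historical variables can feed either kind of model, depending on the question asked. The sketch below uses randomly generated, entirely hypothetical launch data (four of the variables listed above plus an invented sales formula) to fit a logistic-regression classifier for "will the launch succeed?" and a linear regressor for "how much will it sell?". It illustrates the framing only; the numbers say nothing about real products.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(7)
n = 200

# Hypothetical, randomly generated history of past product launches.
# Columns: price, marketing budget, competitor price, market size
X = np.column_stack([
    rng.uniform(10, 50, n),     # price charged
    rng.uniform(1, 20, n),      # marketing budget (thousands)
    rng.uniform(10, 50, n),     # competitor price
    rng.uniform(100, 1000, n),  # market size (thousands of customers)
])

# Invented ground truth: cheaper than competitors + more marketing -> more sales
sales = (5 * (X[:, 2] - X[:, 0]) + 8 * X[:, 1] + 0.1 * X[:, 3]
         + rng.normal(0, 10, n))
success = (sales > np.median(sales)).astype(int)  # 1 = "successful" launch

# Same inputs, two different questions
classifier = make_pipeline(StandardScaler(), LogisticRegression()).fit(X, success)
regressor = LinearRegression().fit(X, sales)

new_product = np.array([[30.0, 12.0, 35.0, 500.0]])
p_success = classifier.predict_proba(new_product)[0, 1]  # classification: a probability
expected_sales = regressor.predict(new_product)[0]        # regression: a quantity
print(f"P(success): {p_success:.3f}")
print(f"Expected sales: {expected_sales:.1f}")
```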

Classification and Regression – Algorithms

Some of the algorithms used in regression and classification are mentioned below. We will not define the algorithms shown in the picture above; these were defined in our previous post.

In upcoming posts, we will cover each of them in detail, including their definitions, use cases, and flow. For now, let's keep our focus on the two supervised learning methods, where each training example is a pair consisting of an input object (typically a vector) and the desired output value.
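Many of these algorithms come in both a classification and a regression flavour. As an illustration (the choice of algorithms and datasets here is ours, not taken from the picture), scikit-learn exposes k-nearest neighbours and decision trees as paired estimators with the same `fit`/`predict` interface; only the type of output changes. Each dataset is a set of (input vector, desired output) pairs.

```python
from sklearn.datasets import load_iris, load_diabetes
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Each training example is a pair: an input vector x and its desired output y
X_cls, y_cls = load_iris(return_X_y=True)      # y is a class label (0, 1, 2)
X_reg, y_reg = load_diabetes(return_X_y=True)  # y is a continuous quantity

pairs = [
    (KNeighborsClassifier(n_neighbors=3), KNeighborsRegressor(n_neighbors=3)),
    (DecisionTreeClassifier(max_depth=3, random_state=0),
     DecisionTreeRegressor(max_depth=3, random_state=0)),
]

for clf, reg in pairs:
    clf.fit(X_cls, y_cls)
    reg.fit(X_reg, y_reg)
    # Same interface, different kinds of output
    print(type(clf).__name__, "->", clf.predict(X_cls[:3]))           # discrete labels
    print(type(reg).__name__, "->", reg.predict(X_reg[:3]).round(1))  # real numbers
```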

Conversion Between Classification and Regression

In some cases, it is possible to convert a regression problem into a classification problem and vice versa.

- Regression to Classification: In short, this is a conversion of cardinal numbers into an ordinal range by giving class names to values, so continuous values are converted into discrete buckets. For example, a bucketing system could classify credit card spending in the value range $0 to $1000 into the following classes:

- $0 to $200 assigned to Class-1

- $201 to $500 assigned to Class-2

- $501 to $1000 assigned to Class-3

- Classification to Regression: This converts an ordinal range into cardinal values, i.e., discrete buckets into continuous values. Reversing the example above by turning each class back into a continuous range gives:

- Class-1 assigned to value range $0 to $200

- Class-2 assigned to value range $201 to $500

- Class-3 assigned to value range $501 to $1000
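The bucketing in this example can be sketched with NumPy's `np.digitize`, which returns the index of the bin each value falls into. The sketch assumes the boundaries at $200 and $500 from the lists above, with `right=True` so that each upper bound belongs to the lower class; the reverse direction simply looks up the dollar range of a class. The `class_ranges` dictionary is a hypothetical helper for this illustration.

```python
import numpy as np

# Upper bounds of Class-1 and Class-2; right=True makes each bound inclusive,
# so $200 falls in Class-1 and $500 falls in Class-2
bins = np.array([200, 500])
class_ranges = {1: (0, 200), 2: (201, 500), 3: (501, 1000)}

# Regression -> classification: continuous amounts to discrete classes
spend = np.array([0, 150, 200, 201, 499.99, 500, 501, 1000])
classes = np.digitize(spend, bins, right=True) + 1  # shift 0-based bin index to Class-1..3

for amount, cls in zip(spend, classes):
    print(f"${amount:>8.2f} -> Class-{cls}")

# Classification -> regression: a class maps back to a range, not a single value
print("Class-2 covers", class_ranges[2])
```

`np.digitize` does not reject amounts outside $0 to $1000: negative values land in Class-1 and values above $1000 land in Class-3, so validate the input range first if that matters.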

A word of caution: converting between the two often introduces mapping errors, because a class identifies only a range rather than an exact value, and this can result in poor model performance. As explained above, classification is a supervised learning problem in which input data is assigned to target classes. Examples of where it is used include loan application approvals, medical diagnoses, and email classification.
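The mapping error mentioned in the caution can be made visible with a small round trip over the credit card spending classes: bucket a few amounts into Class-1 to Class-3, then turn each class back into a single number. Because a class only identifies a range, this sketch assumes the range midpoint as the representative value (a common but arbitrary choice); the gap between the original and reconstructed amounts is the information lost in the conversion.

```python
import numpy as np

# Classes from the credit card example: bounds at $200 and $500 (inclusive)
bins = np.array([200, 500])
class_ranges = {1: (0, 200), 2: (201, 500), 3: (501, 1000)}

amounts = np.array([10.0, 190.0, 480.0, 990.0])

# Regression -> classification
classes = np.digitize(amounts, bins, right=True) + 1  # [1, 1, 2, 3]

# Classification -> regression, assuming the range midpoint as the value
midpoints = {c: (lo + hi) / 2 for c, (lo, hi) in class_ranges.items()}
reconstructed = np.array([midpoints[int(c)] for c in classes])  # [100.0, 100.0, 350.5, 750.5]

errors = np.abs(amounts - reconstructed)  # [90.0, 90.0, 129.5, 239.5]
print("Reconstructed amounts:", reconstructed)
print(f"Mean absolute error: ${errors.mean():.2f}")  # $137.25
```

Wider buckets make the classes easier to predict but increase this reconstruction error, which is the trade-off to weigh before converting one problem type into the other.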

Machine learning algorithms are broadly either supervised or unsupervised. Traditional machine learning focuses on feature engineering, whereas deep learning focuses on end-to-end learning from raw features.

Conclusion – Classification and Regression are powerful tools that enable machines to learn from historical data and make informed decisions or predictions on new data based on the knowledge gained during the learning process. In this post, I have built on our earlier posts on machine learning algorithms to explain the classification and regression techniques of supervised learning. The main focus was on highlighting the difference between classification and regression problems. In short: in a regression problem, the system attempts to predict a value for an input based on past data; in classification, predictions are made by assigning inputs to different categories.

—


Points to Note:

A classifier uses training data to learn how the given input variables relate to the class, for example spam email or not spam email.
