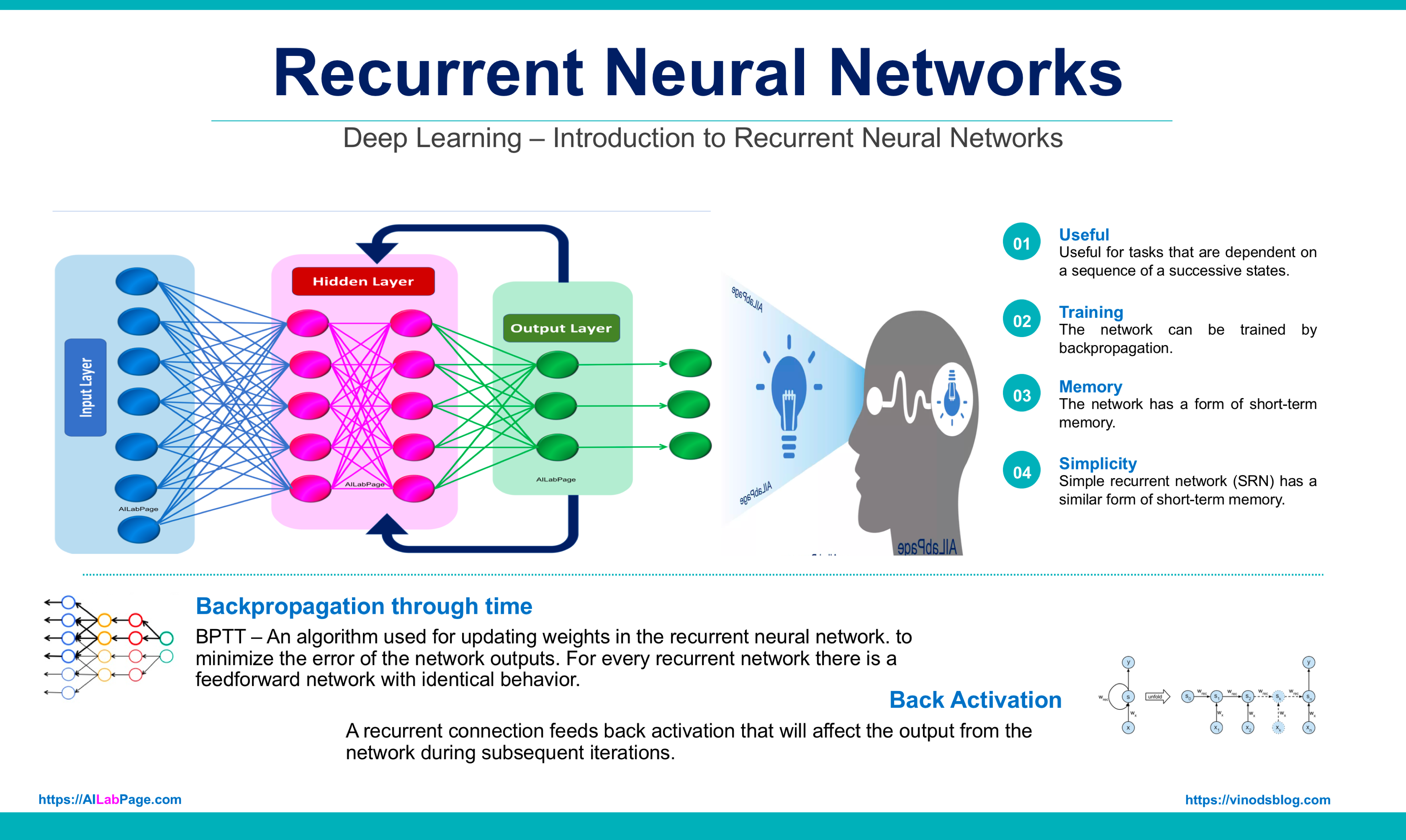

Recurrent Neural Networks: A familiar use of RNNs is next-word prediction. When you use Google or Facebook, these interfaces can predict the next word that you are about to type. RNNs have loops that allow information to persist. Because the same weights are reused at every step of the loop, an RNN needs fewer parameters than a network that learns separate weights for every position in a sequence.

These neural nets are considered to be fairly good for modeling sequence data. Recurrent neural networks can be seen as a linear-chain variant of recursive networks. They have a “memory,” which sets them apart from other neural networks. This memory carries information about what was calculated in the previous states. An RNN uses the same parameters for each input because it performs the same task on every element of the sequence to produce the output.
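To make the idea of a memory and shared parameters concrete, here is a minimal NumPy sketch of a plain RNN forward pass. The names `rnn_forward`, `W_xh`, `W_hh`, and `b_h`, the layer sizes, and the `tanh` activation are assumptions chosen for illustration; they are not taken from any particular library.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a plain RNN over a sequence and return the hidden state at every step."""
    h = np.zeros(W_hh.shape[0])      # the "memory" starts out empty
    states = []
    for x_t in inputs:               # one iteration per element of the sequence
        # The same W_xh, W_hh and b_h are reused at every time step.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4       # hypothetical sizes
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

sequence = rng.normal(size=(5, input_size))   # a sequence of 5 time steps
hidden_states = rnn_forward(sequence, W_xh, W_hh, b_h)
print(hidden_states.shape)  # (5, 4)
```

Note that the number of parameters does not depend on the sequence length: a sequence of 5 steps and a sequence of 500 steps would use the same three arrays.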

This post is a high-level overview meant to build a basic understanding. Don’t expect too much if you are a PhD or master’s degree student; we will focus only on the intuition behind RNNs. It should make you a little more comfortable when you start digging deeper into RNNs.

Based on our experience in AILabPage’s lab sessions, one lesser-known fact about recurrent neural networks is their ability to perform dynamic computation on sequences of data, which allows them to capture temporal dependencies effectively.

Artificial Neural Networks – What Is It

In 1943, McCulloch and Pitts proposed the first mathematical model of a neural network. Artificial neural networks were modelled on a simplified version of human brain neurons. According to Wikipedia, a “Recurrent neural network is a class of artificial neural network where connections between nodes form a directed graph along a sequence.” This allows it to exhibit temporal dynamic behaviour for a time sequence.

There are several kinds of Neural Networks in deep learning.

- Multi-Layer Perceptron

- Radial Basis Network

- Recurrent Neural Networks

- Generative Adversarial Networks

- Convolutional Neural Networks

As per AILabPage, Artificial neural networks (ANNs) are “Complex computer code written with several simple, highly interconnected processing elements that is inspired by human biological brain structure for simulating human brain working and processing data (Information) models”.

It is important to note that artificial neural networks are very different from conventional computer programs, so please don’t get the wrong impression from the above definition. Neural networks consist of an input layer, an output layer, and at least one hidden layer.

Training neural networks can be hard, complex, and time-consuming, for reasons that are well known to data scientists. One of the major reasons is the weights: in a neural network, the weights of the hidden layers are highly interdependent. The three main steps in training a neural network are:

- Run a forward pass and make a prediction.

- Compare the prediction to the ground truth using a loss function.

- Use the error value to run backpropagation and update the weights.

The algorithm to train ANNs depends on two basic concepts: first, reducing the sum squared error to an acceptable value, and second, having reliable data to train the network under supervision.
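The three training steps and the sum-squared-error objective can be sketched in a few lines of NumPy. This is only a toy illustration: the single linear layer, the synthetic data, the learning rate, and the number of epochs are all assumptions, and with one linear layer the backward pass reduces to a single gradient expression rather than full backpropagation through hidden layers.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))           # toy inputs (assumed data)
true_w = np.array([2.0, -3.0])
y = X @ true_w                          # ground truth for supervised training

w = np.zeros(2)                         # weights to be learned
learning_rate = 0.1                     # hypothetical value
losses = []

for epoch in range(200):
    pred = X @ w                        # step 1: forward pass, make a prediction
    error = pred - y
    loss = np.sum(error ** 2)           # step 2: sum squared error vs. ground truth
    grad = 2 * X.T @ error              # step 3: gradient of the loss w.r.t. w
    w -= learning_rate * grad / len(X)  # update the weights against the gradient
    losses.append(loss)

print(np.round(w, 3))
```

The loss recorded in `losses` shrinks from epoch to epoch, which is the “reducing the sum squared error to an acceptable value” part of the description.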

Recurrent Neural Networks – Introduction

Recurrent neural networks are not that old; they were developed in the 1980s. One of their most distinctive features is the universal approximation property (“UAP”): in principle, they can approximate virtually any dynamical system.

This property tempts us to say that recurrent neural networks have something magical about them.

- RNNs take a time series as input and provide a time series as output,

- They have at least one connection cycle.

There is a strong perception about training recurrent neural networks: it is assumed to be super complex, difficult, expensive, and time-consuming. After a few hands-on experiences in our lab, our impression is just the opposite, so in our experience common wisdom does not match reality. The robustness and scalability of RNNs are super exciting compared to traditional neural networks and even convolutional neural networks.

Recurrent neural networks are special compared to other neural networks. Non-RNN APIs have many constraints and limitations (although RNNs sometimes share them). A non-RNN API takes:

- Input – Fixed-size vector: for example, an “image” or a “character”

- Output – Fixed-size vector: for example, a vector of probabilities

- Computation – Fixed number of layers / computational steps

Before we go any deeper, we need to answer the question “What kinds of problems can be solved with recurrent neural networks?”

Solving Complex Problems with Sequential Data

Recurrent Neural Networks (RNNs) are well-suited for solving a wide range of problems that involve sequential or time-series data. Some of the key problems that can be effectively addressed using RNNs include

- Natural Language Processing (NLP): RNNs are commonly used in tasks such as language translation, sentiment analysis, text generation, and speech recognition. Their ability to capture temporal dependencies in language sequences makes them valuable for understanding and generating human language.

- Time Series Analysis: RNNs are ideal for time series prediction and forecasting, enabling accurate predictions based on historical data. They can be applied to financial forecasting, stock market analysis, weather prediction, and other time-dependent data analysis tasks.

- Speech Recognition: RNNs excel in converting spoken language into written text. They can process audio data over time to recognize speech patterns and convert them into textual representations.

- Music Generation: RNNs can be used in music composition and generation tasks. By learning patterns and structures from existing music, RNNs can create new musical pieces that follow similar styles and harmonies.

- Video Analysis: RNNs can be applied to video analysis tasks, such as action recognition, object tracking, and activity forecasting. They can process sequential frames of a video to understand temporal patterns and movements.

- Natural Language Generation: RNNs can generate coherent and contextually relevant text based on given prompts or input, making them useful in chatbots, automatic summarization, and creative writing applications.

- Sentiment Analysis: RNNs can determine the sentiment of a given piece of text, classifying it as positive, negative, or neutral, which is valuable for analyzing customer feedback and social media data.

- Gesture Recognition: RNNs can recognize gestures from motion capture data, making them applicable in virtual reality and human-computer interaction systems.

These are just a few examples of the diverse problems that RNNs can effectively solve. Their ability to handle sequential data and capture temporal dependencies makes them a powerful tool for a wide range of real-world applications across various industries.

When we deal with RNNs, they show excellent and dynamic abilities to deal with various input and output types. Before we go deeper, let’s look at some real-life examples.

- Varying Inputs and Fixed Outputs: Speech and text recognition, and sentiment classification. Today this can be a big relief for social media platforms that want to filter out negative comments, the kind posted by people whose only motive is to pull down someone else’s efforts rather than to help. Classifying tweets and Facebook comments into positive and negative sentiment becomes easy here: the inputs have varying lengths, while the output has a fixed length.

- Fixed Inputs and Varying Outputs: AILabPage’s image recognition (captioning) takes a single image as input and produces a caption whose length varies with the content, from children riding bikes to a quiet moment in a park to sports activities like football or dancing. Applications range from improving accessibility for visually impaired people to streamlining content indexing for businesses.

- Varying Inputs and Varying Outputs: AILabPage’s machine translation (language translation). Translating by hand is laborious. Like Google’s online translation, the model reads the input text as a whole and produces output text whose length may differ from the input. It aims to convey not only the words but also the sentiment and the context, so both the input and the output can vary in length.

As evident from the cases discussed above, Recurrent Neural Networks (RNNs) excel at mapping inputs to outputs of diverse types and lengths, showcasing their versatility and broad applicability.
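The first case above, varying inputs with a fixed output, can be sketched as follows. This is an untrained toy model with random weights, so the probabilities it produces are meaningless; the function name `classify`, the sizes, and the use of the last hidden state as the summary of the sequence are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
input_size, hidden_size, num_classes = 8, 16, 2   # hypothetical sizes
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
W_hy = rng.normal(scale=0.1, size=(num_classes, hidden_size))

def classify(sequence):
    """Read a sequence of any length and return a fixed-size probability vector."""
    h = np.zeros(hidden_size)
    for x_t in sequence:                       # works for any number of steps
        h = np.tanh(W_xh @ x_t + W_hh @ h)
    logits = W_hy @ h                          # only the last state is used
    exp = np.exp(logits - logits.max())        # numerically stable softmax
    return exp / exp.sum()

short_comment = rng.normal(size=(3, input_size))    # e.g. 3 word vectors
long_comment = rng.normal(size=(40, input_size))    # e.g. 40 word vectors
print(classify(short_comment).shape, classify(long_comment).shape)  # (2,) (2,)
```

Captioning and translation use the same loop, but emit an output at several steps instead of only at the end.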

The underlying foundation of RNNs lies in their ability to handle sequential data, making them inherently suitable for tasks involving time series, natural language processing, audio analysis, and more. The generalized nature of RNNs allows them to adapt and learn from temporal dependencies in data, enabling them to tackle a wide range of problems and deliver meaningful insights in various domains.

Their capacity to capture context and temporal relationships in sequential data makes RNNs a valuable tool for addressing real-world challenges, where the length and complexity of input-output mappings may vary considerably. By leveraging this inherent flexibility, developers and researchers can employ RNNs as a fundamental building block for constructing innovative and sophisticated models tailored to their specific data-driven needs.

Recurrent Neural Networks & Sequence Data

As we know by now, RNNs are considered to be fairly good for modeling sequence data. Let’s understand sequential data a bit. While playing cricket, we predict where the ball is going and run in that direction. This happens without any conscious guessing or calculation, because our brain is trained so well that we don’t even realize why we run in the ball’s direction. Recurrent networks work in a similar way: they take as input not only the current example they see but also what they have perceived previously in time.

If we look at a recording of the ball’s movement later, we will have enough data to understand and match our action. This is a sequence: a particular order in which one thing follows another. With this information, we can see, for example, that the ball is moving to the right. Sequence data can be obtained from:

- Audio files: This is considered a natural sequence. Audio clips can be broken down into spectrogram frames and fed into RNNs.

- Text file: Text is another form of sequence; text data can be broken into characters or words (remember search engines guessing your next word or character).
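As a small illustration of breaking text into characters, the sketch below turns a string into a sequence of one-hot vectors, one per character, which is a common way to feed text to an RNN. The example string and the helper name `one_hot_encode` are assumptions for illustration.

```python
import numpy as np

text = "hello"
vocab = sorted(set(text))                       # unique characters in the text
char_to_index = {ch: i for i, ch in enumerate(vocab)}

def one_hot_encode(s):
    """Turn a string into a (time steps, vocabulary size) array of one-hot rows."""
    encoded = np.zeros((len(s), len(vocab)))
    for t, ch in enumerate(s):
        encoded[t, char_to_index[ch]] = 1.0     # mark the character seen at step t
    return encoded

sequence = one_hot_encode(text)
print(vocab)            # ['e', 'h', 'l', 'o']
print(sequence.shape)   # (5, 4)
```

Each row is one time step, so the order of the rows preserves the order of the characters.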

Based on the examples above, we can comfortably say that RNNs are good at processing sequence data for predictions. RNNs are gaining traction and popularity for one core reason: they allow us to operate over sequences of vectors for input and output, not just fixed-size vectors. On the downside, RNNs suffer from short-term memory: information from early steps fades as the sequence gets longer.

Use cases – Recurrent Neural Networks

Let’s understand some of the use cases for recurrent neural networks. There are numerous exciting applications that got a lot easier, more advanced, and more fun-filled because of RNNs. Some of them are listed below.

- Music synthesis

- Speech, text recognition & sentiment classification

- Image recognition (captioning)

- Machine Translation – Language translation

- Chatbots & NLP

- Stock predictions

To comprehend the intricacies of constructing and training Recurrent Neural Networks (RNNs), including widely utilized variations like Gated Recurrent Units (GRUs) and Long Short-Term Memory (LSTM) networks, it is essential to delve into the fundamental principles and underlying mechanisms of these sophisticated architectures.

Mastering the art of RNNs entails grasping the concept of sequential data processing, understanding the role of recurrent connections in retaining temporal information, and exploring the challenges posed by vanishing or exploding gradients. By gaining proficiency in these techniques, developers and researchers can harness the power of RNNs to address a myriad of real-world problems, ranging from natural language processing and speech recognition to time series analysis and beyond.

There are a lot of free and paid courses available on the internet. At AILabPage, we also conduct hands-on classroom training in our labs to train deep learning enthusiasts. These courses can help you solve natural language problems, including text synthesis. Ultimately, you will have the opportunity to build a deep learning project with cutting-edge, industry-relevant content.

RNN models have demonstrated exceptional performance in handling temporal data. They encompass various variations, such as LSTMs (long short-term memory), GRUs (gated recurrent units), and Bidirectional RNNs. These sequence algorithms have significantly simplified the process of constructing models for natural language, audio files, and other types of sequential data, making them more accessible and effective in various applications.

Vanishing and Exploding Gradient Problem

Deep neural networks have a major issue with gradients: they are very unstable. During backpropagation, the gradients that reach the earlier layers tend either to explode or to vanish quickly.

The vanishing gradient problem emerged in the 1990s as a major obstacle to RNNs’ performance. In this problem, the weight adjustments that should decrease the error, together with the “synch problem,” cause the network to cease learning at a very early stage.

This problem had a significant impact on the popularity and usability of recurrent neural networks (RNNs). It arises because an RNN carries information across many time steps: during training, the error signal is multiplied by the recurrent weights once per step, so the gradients can either skyrocket or shrink toward zero, overpowering the learning algorithm.

As a result, learning stalls: the network can no longer make progress, and the entire learning process comes to a standstill. This challenge posed significant obstacles to the practical application and widespread adoption of RNNs, prompting researchers and developers to seek alternative architectures and techniques, such as LSTMs (Long Short-Term Memory) and GRUs (Gated Recurrent Units), which have proven more effective at mitigating these gradient issues while preserving the temporal dependencies in sequential data.

For example, a value might be multiplied by a new number at every step of the loop. The result heads toward infinity if all the numbers are greater than one, shrinks toward zero if they are all smaller than one, and gets stuck at zero if any number is zero. How quickly this happens depends on how many time steps there are. For us at AILabPage, machine learning is a crystal-clear and simple task. It is not only for PhD aspirants; it’s for you, us, and everyone.
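The repeated multiplication described above is easy to check numerically. The sketch below is a deliberately simplified stand-in for what happens to a gradient as it passes through many time steps; the factors 0.9 and 1.1 and the 100 steps are arbitrary values chosen for illustration.

```python
def repeated_product(factor, steps):
    """Multiply 1.0 by the same factor once per time step."""
    value = 1.0
    for _ in range(steps):
        value *= factor
    return value

shrinking = repeated_product(0.9, 100)   # factor below one: vanishes (about 2.7e-05)
growing = repeated_product(1.1, 100)     # factor above one: explodes (about 1.4e+04)
print(shrinking, growing)
```

Even factors this close to one produce values that differ by roughly nine orders of magnitude after 100 steps, which is why long sequences are hard for a plain RNN to learn from.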

Not Covered Here

Topics that we have not covered in this post, but that are critical to understand for stronger hands-on work with RNNs, are listed below.

- Sequential Memory

- Backpropagation through time (BPTT) in a Recurrent Neural Network

- LSTMs and GRUs

Conclusion – I think that getting to know the types of machine learning algorithms helps you see a clearer picture. The answer to the question “What machine learning algorithm should I use?” is always “It depends.” It depends on the size, quality, and nature of the data. It depends on the objective: what are you torturing the data for? The more we torture the data, the more useful information comes out. It also depends on how the math of the algorithm was translated into instructions for the computer you are using. In short, understanding the nuances of various algorithms enables better decision-making in selecting the most suitable approach for a given task.

—

Points to Note:

All credits, if any, remain with the original contributors. We have covered the basics of recurrent neural networks. RNNs are all about modelling units in sequence, which makes them a good fit for natural language processing (NLP) tasks, although for such tasks it is often hard to decide between CNN and RNN algorithms.


