Mandate for Humans – Deep learning, once considered a domain exclusive to machines, has now become a mandate for humans as well. With the exponential growth of data and the increasing complexity of problems, humans need to delve into the intricacies of deep learning to unlock its potential.

Understanding neural networks, optimizing architectures, and interpreting results are vital skills in today’s data-driven world. Whether for research, innovation, or practical applications, embracing deep learning empowers individuals to harness the transformative power of artificial intelligence and contribute meaningfully to advances in many fields. This is Part 2 of “AILabPage’s DeepLearning Series”. The focus here is on the basic terms that revolve and evolve around deep learning. Find the first part here – DeepLearning Basics : Part-1

Deep learning holds a hidden gem: its capacity to keep improving. Deep learning models refine themselves from data rather than from hand-written rules, and a related research area known as “learning to learn” (meta-learning) aims for models that adapt to new tasks with little human intervention. This adaptability and robustness make deep learning especially valuable for tackling complex real-world problems.

What we will cover here

Deep learning terminology can be quite overwhelming to newcomers. With this in mind, this blog post covers the important aspects of deep learning at a very high level. Below are the discussion points for this post.

- Basics Around Sciences

- What is Deep Learning (Helicopter View)

- Deep Learning Computational Models

- How Deep Learning Learns

- Frequently Used Jargon in Deep Learning

- Deep Learning Algorithms – High-Level View

- Implementation of Deep Learning Models

- Deep Learning Limitations

- Notable Use Cases & Applications

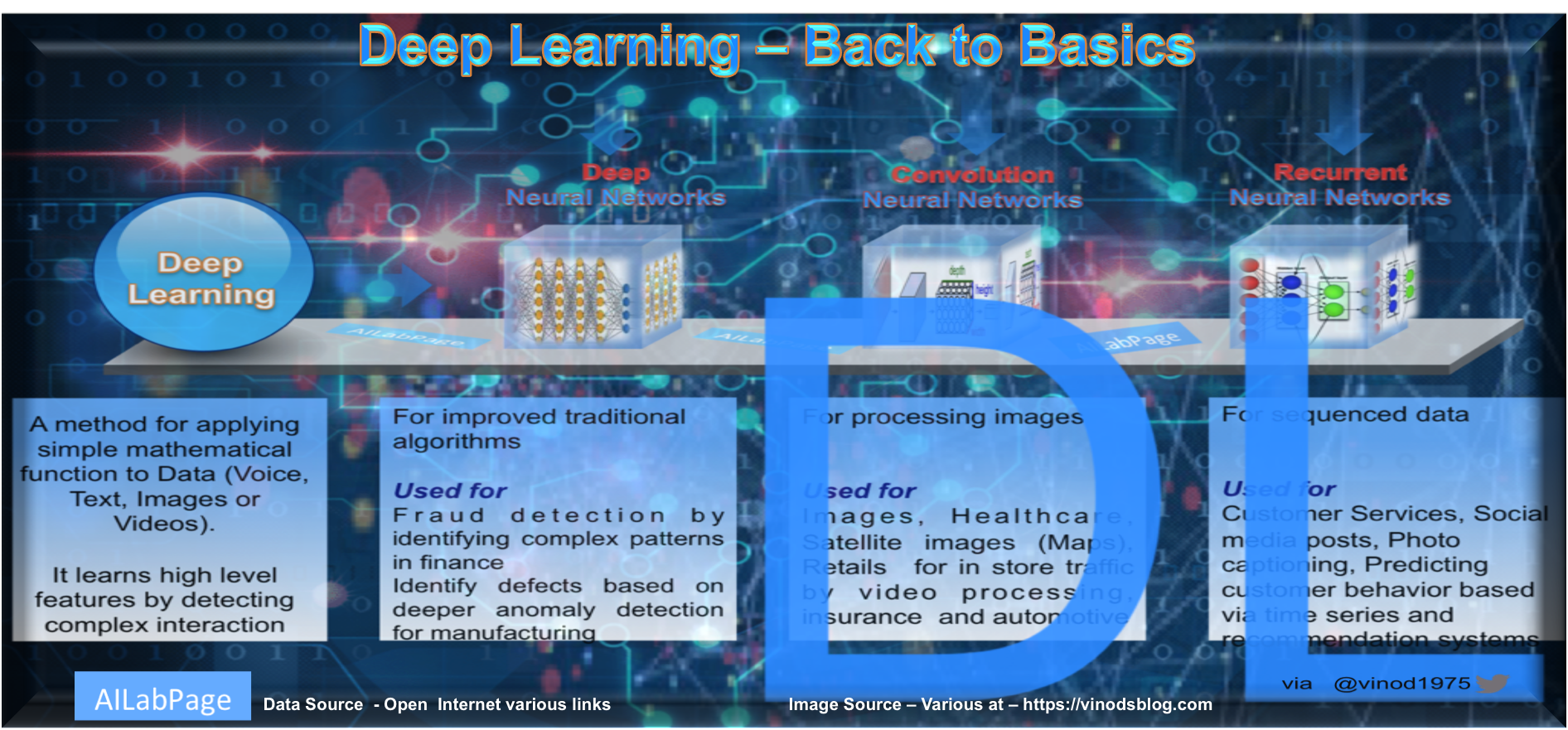

In AILabPage style, deep learning (DL) can be defined as a set of techniques that use neural networks to simulate human decision-making skills.

Basics Around Sciences – Mandate for Humans

Before we go deeper into deep learning, the main agenda of this blog post, let’s understand the basics of basics. There are two types of science we see around us almost every day:

- Hard Sciences – Physics, chemistry, biology etc.

  - Example: A computer engineer can design a system architecture and system model that later takes shape in reality and does what was claimed.

- Soft Sciences – Economics, political science etc.

  - Example: Sales/marketing teams can give an amazing presentation about how a certain product will do over the next five years and expect a good budget in return, yet there is a big chance they fail.

So the difference is clear: hard science can build complex models of the world that actually work, while soft science has far less of this predictive ability. Deep learning falls under hard science.

What is Deep Learning (Helicopter View)

Deep learning is an artificial intelligence technique that teaches computers to do what comes naturally to humans: learn by example. A key advantage of deep learning networks is that they often continue to improve as the size of your data increases.

Driverless cars, speech recognition, and image processing are key applications powered by this technology. Deep learning gives a driverless car the ability to tell the difference between a pedestrian and a lamppost, and image processing helps it recognize road signs and distinguish them by their markings. Deep learning is getting lots of attention lately, and for good reason: it is achieving results that were not possible before.

Deep Learning Computational Models

The human brain can be viewed as a deep and complex recurrent neural network. Deep learning allows computational models that are composed of multiple processing layers to learn representations of data with multiple levels of abstraction. In very simple words, the two core ideas behind how such models compute and train can be defined as below.

- Feedforward propagation – In a feedforward neural network, connections run in one direction only, i.e. input to hidden to output, so the values are “fed forward” through the layers.

- Backpropagation (a supervised learning algorithm) is a training algorithm with two steps:

  - Feedforward the values.

  - Calculate the error at the output and propagate it back, layer by layer, to the earlier layers so that each weight can be adjusted.

In short, forward propagation is part of the backpropagation algorithm and comes before the backward pass.
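The two backpropagation steps can be sketched in pure Python on the classic XOR problem. This is a minimal illustration under stated assumptions, not AILabPage's own code: the network size (one hidden layer of three sigmoid units), the learning rate, the squared-error loss and the function name `train_xor` are all choices made for readability. It also includes the plain gradient-descent weight update that usually follows the backward pass.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=3, lr=0.5, epochs=5000, seed=0):
    """Hypothetical helper: train a tiny 2-hidden-1 network on XOR.
    Returns the mean squared error recorded for each epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    # Hidden-layer weights W1[j][i] and biases b1[j]; output weights w2[j] and bias b2.
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Step 1: feedforward the values (input -> hidden -> output).
            h = [sigmoid(sum(W1[j][i] * x[i] for i in range(2)) + b1[j]) for j in range(hidden)]
            out = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            total += (out - y) ** 2
            # Step 2: calculate the error and propagate it back to the hidden layer.
            # Gradients are for the loss 0.5 * (out - y)^2.
            d_out = (out - y) * out * (1 - out)
            d_h = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent updates using the propagated errors.
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                for i in range(2):
                    W1[j][i] -= lr * d_h[j] * x[i]
                b1[j] -= lr * d_h[j]
            b2 -= lr * d_out
        losses.append(total / len(data))
    return losses

losses = train_xor()
print(f"MSE first epoch: {losses[0]:.3f}, last epoch: {losses[-1]:.3f}")
```

Note that `d_h` is computed before `w2` is updated, so the error is propagated back through the weights that produced the forward pass.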

How Deep Learning Learns

Until recently, computational models of brain information processing, in vision and beyond, have largely been shallow architectures performing simple computations. The human brain has dominated computational neuroscience to date and will likely keep doing so for the next couple of decades. Most deep learning methods use neural network architectures, which is why deep learning models are often referred to as deep neural networks.

Deep learning learns multiple levels of features, or representations, with each layer building on the one before it so that the layers form a hierarchy from low-level to high-level features. Traditional machine learning relies heavily on hand-crafted feature engineering, whereas deep learning focuses on end-to-end learning from raw features.

Deep learning is a machine learning method. It produces predictive outputs from a given set of inputs. DL can use supervised and unsupervised learning to train the model. The term “deep” usually refers to the number of hidden layers in the neural network. Traditional neural networks typically contain only 2-3 hidden layers, while deep networks can have 100 or even more.
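To make “deep” concrete, the sketch below builds fully connected networks whose depth is just a parameter. It is a minimal, untrained illustration: the layer widths, the ReLU activation, the Gaussian weight initialisation and the helper names `make_network` and `forward` are hypothetical choices, and the point is only how hidden layers stack on top of one another.

```python
import random

def relu(v):
    return [max(0.0, x) for x in v]

def make_network(input_size, hidden_sizes, output_size, seed=0):
    """Hypothetical helper: return one (weights, biases) pair per layer
    (every hidden layer plus the output layer)."""
    rng = random.Random(seed)
    sizes = [input_size] + list(hidden_sizes) + [output_size]
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        b = [0.0] * n_out
        layers.append((W, b))
    return layers

def forward(layers, x):
    """Feed x through every layer; hidden layers use ReLU, the output layer stays linear."""
    for k, (W, b) in enumerate(layers):
        z = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]
        x = z if k == len(layers) - 1 else relu(z)
    return x

shallow = make_network(4, [8, 8], 2)   # 2 hidden layers: a "traditional" network
deep = make_network(4, [8] * 20, 2)    # 20 hidden layers: a (modestly) deep network
print(len(shallow) - 1, len(deep) - 1)  # → 2 20
print(forward(deep, [1.0, 0.5, -0.5, 2.0]))
```

Both networks run the same forward loop; the only difference is how many hidden layers the input passes through before reaching the output.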

Deep learning workflows create train-test splits of the data and, wherever possible, use cross-validation. Training data is loaded into main memory (often in mini-batches) and a model is computed from it. Deep Dream works differently: rather than training a predictive model, it uses an already-trained network to generate new images or transform existing ones and give them a dreamlike flavour, especially when applied recursively.
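A hand-rolled k-fold split illustrates the idea behind cross-validation: every sample is used for testing exactly once and for training in all the other folds. Libraries such as scikit-learn already provide this (`sklearn.model_selection.KFold`); the function `k_fold_indices` below is a hypothetical, dependency-free stand-in written only to show the mechanics.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Hypothetical helper: yield (train_idx, test_idx) pairs so that
    each sample lands in exactly one test fold."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size

for fold, (train, test) in enumerate(k_fold_indices(10, k=3)):
    print(fold, len(train), len(test))
# → 0 6 4
# → 1 7 3
# → 2 7 3
```

For large deep learning datasets, a single train/validation/test split is often used instead, because training k separate models can be expensive.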

Frequently Used Jargon in Deep Learning

- Perceptron – A single-layer neural network. The perceptron is a linear classifier used in supervised learning. Its computing structure is inspired by the design of the human brain: the algorithm takes a set of inputs and returns a set of outputs.

- Multilayer Perceptron (MLP) – A Multilayer Perceptron is a feedforward neural network with multiple fully connected layers that use nonlinear activation functions to deal with data that is not linearly separable.

- Deep Belief Network (DBN) – A DBN is a type of probabilistic graphical model that learns a hierarchical representation of the data in an unsupervised manner.

- Deep Dream – A technique invented by Google that tries to distil the knowledge captured by a deep Convolutional Neural Network.

- Deep Reinforcement Learning (DRL) – This is a powerful and exciting area of AI research, with potential applicability to a variety of problem areas. Other common terms in this area include Deep Q-Networks (DQN) and Deep Deterministic Policy Gradients (DDPG).

- Deep Neural Network (DNN) – A neural network with many hidden layers. There is no fixed rule for the minimum number of layers a deep neural network must have; usually it is 4-5 or more.

- Recurrent Neural Networks (RNN) – A neural network designed to capture context in sequences such as speech, text or music. An RNN allows information to loop through the network, so earlier inputs influence how later ones are processed.

- Convolutional Neural Network (CNN) – A neural network for image recognition, processing and classification. Object detection and face recognition are among the tasks where CNNs are widely used.

- Recursive Neural Networks – A hierarchical kind of network in which the input has no time aspect but must be processed hierarchically, in a tree structure.
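The perceptron and MLP entries above can be contrasted in a few lines of Python. This sketch trains a perceptron with the classic perceptron learning rule on the AND function (which is linearly separable), shows that the same rule cannot fit XOR, and then solves XOR with a small two-layer MLP whose weights are set by hand rather than learned. The step activation, the thresholds and the function names are illustrative assumptions.

```python
def step(z):
    """Threshold activation: fire (1) only when the weighted sum is positive."""
    return 1 if z > 0 else 0

def train_perceptron(samples, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge the weights toward each misclassified example."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in samples:
            pred = step(w[0] * x[0] + w[1] * x[1] + b)
            if pred != y:
                update = lr * (y - pred)
                w = [w[0] + update * x[0], w[1] + update * x[1]]
                b += update
                errors += 1
        if errors == 0:  # every sample classified correctly, so stop early
            break
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

# A single perceptron learns AND, which is linearly separable.
w, b = train_perceptron(AND)
print([step(w[0] * x0 + w[1] * x1 + b) for (x0, x1), _ in AND])  # → [0, 0, 0, 1]

# It cannot learn XOR: no single straight line separates the two classes.
w_x, b_x = train_perceptron(XOR)
xor_preds = [step(w_x[0] * x0 + w_x[1] * x1 + b_x) for (x0, x1), _ in XOR]
print(xor_preds == [y for _, y in XOR])  # → False

def xor_mlp(x0, x1):
    """Two-layer MLP with hand-set weights: hidden units compute OR and AND,
    and the output fires for 'OR but not AND', which is XOR."""
    h_or = step(x0 + x1 - 0.5)
    h_and = step(x0 + x1 - 1.5)
    return step(h_or - h_and - 0.5)

print([xor_mlp(x0, x1) for (x0, x1), _ in XOR])  # → [0, 1, 1, 0]
```

The hidden layer is what gives the MLP its extra power here: it re-represents the inputs so that the final unit only has to draw a single line.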

Upcoming Points

The boundary between deep learning and machine learning is fuzzy; it can seem complex and simple at the same time. Deep learning is the key to voice control in consumer devices such as phones, tablets, TVs, and hands-free speakers. The remaining points below will be covered in the next post:

- Deep Learning Algorithms – High-Level View

- Implementation of Deep Learning Models

- Deep Learning Limitations

- Notable Use Cases & Applications

Conclusion – Deep learning as we know it would not exist if the digital revolution hadn’t made big data available. Its scope reaches well beyond traditional machine learning. Deep learning terminology can be quite overwhelming to newcomers. The algorithms it uses can be supervised or unsupervised.

It uses many layers of nonlinear processing units for feature extraction and transformation. Deep learning techniques have become popular for solving traditional Natural Language Processing problems such as sentiment analysis (with RNNs), as well as image processing problems (with CNNs). An artificial neuron can simply be described as a computational model inspired by the neurons of the human brain.

—

Points to Note:

All credits, if any, remain with the original contributors only. We have covered the basics of deep learning for humans and the importance of quality data. The next post will talk about implementation, usage and practical experience for markets.

Books + Other readings Referred

- Research through open internet, news portals, white papers and imparted knowledge via live conferences & lectures.

- Lab and hands-on experience of @AILabPage (Self-taught learners group) members.

Feedback & Further Questions

Do you have any questions about AI, Machine Learning, Data Science or Big Data Analytics? Leave a question in a comment or ask via email. We will try our best to answer it.

============================ About the Author =======================

Read about the author at: About Me

Thank you all for spending your time reading this post. Please share your opinions, comments, critiques, agreements or disagreements. For more details about posts, subjects and relevance, please read the disclaimer.

====================================================================
