Deep Learning (DL) – A very young and limitless field. It is a class of machine learning in which the theories of the subject are not yet firmly established and views change quickly, almost on a daily basis.

Deep learning is strengthening artificial intelligence and, through its successful applications, is playing a major role in AI-based innovations.

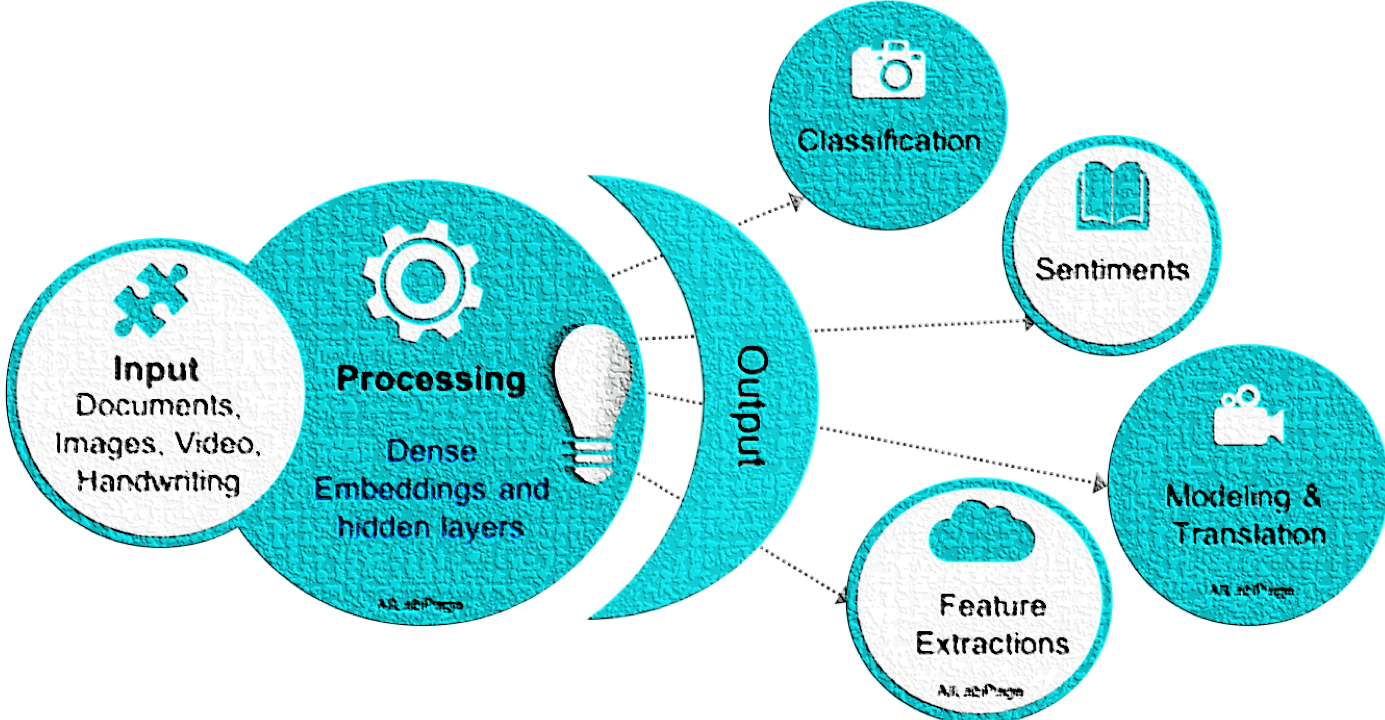

The principles of deep learning are advancing rapidly. Provided the need for computing power and storage is met, deep learning can analyse large amounts of data to recognise patterns and to extract features or relevant information from images, videos, speech, social media traffic and much more.

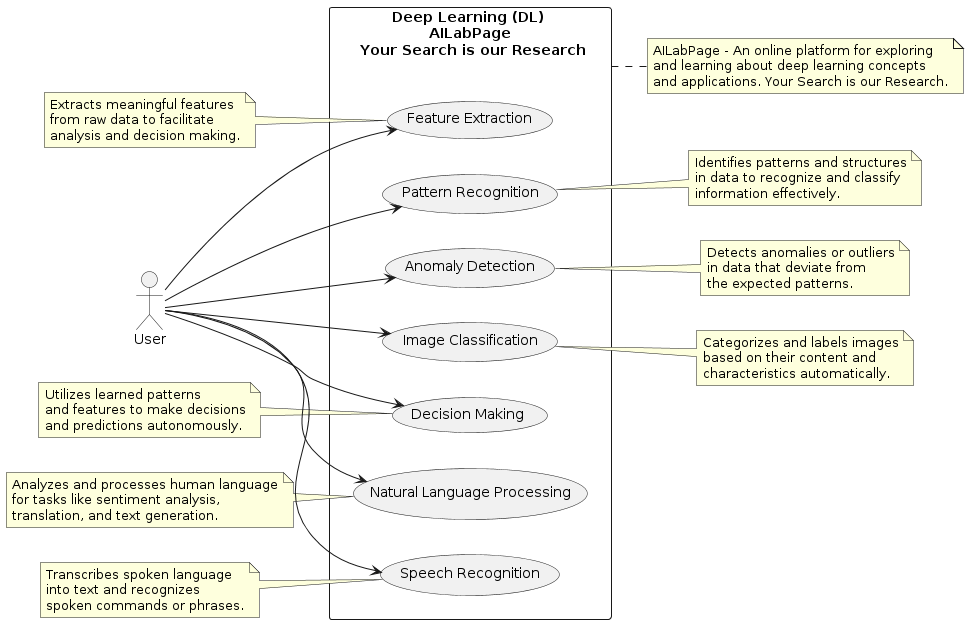

What is Deep Learning?

AILabPage defines deep learning as “Undeniably a mind-blowing synchronisation technique applied on the basis of 3 foundation pillars: large data, computing power, and skills (enriched algorithms) and experience, which practically has no limits”.

Deep learning is used, perhaps too readily, to predict the unpredictable. In our opinion, we are all so busy creating artificial intelligence from a combination of non-biological neural networks and natural intelligence that we are not exploring what we already have in hand.

Deep learning, a subset of machine learning, is a specialist with an extremely complex skill set, aimed at achieving far better results from the same data set. It is modelled on the NI (Natural Intelligence) mechanics of the biological neuron system. Its skill set is complex because of the methods it uses for training: learning in deep learning is based on “learning data representations” rather than on the “task-specific algorithms” used by other methods.

“I think people need to understand that deep learning is making a lot of things, behind the scenes, much better” – Geoffrey Hinton

Deep learning approaches a problem by learning the underlying representations of the data. In my impression, this representation-focused perspective on artificial neural networks is currently very popular.

Hopefully there will never be a time when we need to do the reverse, i.e. use artificial intelligence to create natural intelligence. In a nutshell, we need to be careful not to translate any of our research or machine learning into human experiences.

Deep Learning (DL) – Importance

Deep learning is part of the bundle of emerging AI technologies: a collection of high-performing algorithms and multilayer feature extraction techniques. This may be why it is also called representation learning.

At the moment, DL is a leading technology breakthrough in the AI domain. Facing reality, though, there is a world of difference between how we imagine memories and conditioned responses form in our brains and how artificial neural networks achieve the same.

It is fair to say that deep learning is based on artificial neural networks. The current work and examples produced by deep learning are just a sample of what machines can do.

Deep Learning (DL) has revolutionised image processing and classification, as well as speech recognition, delivering high accuracy. Business leaders and the developer community need to understand what it is, what it can do and how it works.

Deep Learning Computational Models

The human brain can be viewed as a deep and complex recurrent neural network. Deep learning allows computational models composed of multiple processing layers to learn representations of data with multiple levels of abstraction. In very simple terms, the two core mechanisms can be defined as follows.

- Feedforward propagation – A neural network architecture in which connections are “fed forward” only, i.e. from input to hidden to output layers.

- Backpropagation – A supervised training algorithm (the error is measured against known target labels) with two steps:

  - Feed the values forward through the network.

  - Calculate the error at the output and propagate it back, layer by layer, to the layers before.

In short, forward propagation is part of the backpropagation algorithm and comes before the backward pass. Once you can develop the mathematical and algorithmic underpinnings of deep neural networks from scratch and implement them in your own neural network library, in Python or any other language, you are well on your way from beginner to pro.
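As a minimal sketch of the two steps above, here is a tiny network in plain Python with one hidden layer of sigmoid units, trained by backpropagation on the XOR problem with squared-error loss and per-sample gradient descent. The class name `TinyMLP`, the layer sizes, the learning rate and the epoch count are illustrative assumptions, not a reference implementation.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """Hypothetical 2-input network: n_hidden sigmoid units -> 1 sigmoid output."""

    def __init__(self, n_hidden: int = 3, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Step 1: feed the values forward (input -> hidden -> output).
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hi for w, hi in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data):
        # Mean squared error over (input, target) pairs.
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, x, t, lr: float = 0.5) -> None:
        h, y = self.forward(x)
        # Step 2: compute the error at the output and propagate it back.
        # Gradient of (y - t)**2 with respect to the output pre-activation.
        delta_out = 2.0 * (y - t) * y * (1.0 - y)
        # Hidden-layer deltas use the output weights before they are updated.
        delta_hidden = [delta_out * w * hi * (1.0 - hi) for w, hi in zip(self.w2, h)]
        # Gradient-descent update for the output layer.
        self.w2 = [w - lr * delta_out * hi for w, hi in zip(self.w2, h)]
        self.b2 -= lr * delta_out
        # Gradient-descent update for the hidden layer.
        for j, d in enumerate(delta_hidden):
            self.w1[j] = [w - lr * d * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * d


# XOR is not linearly separable, so it needs the hidden layer.
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
net = TinyMLP(n_hidden=3, seed=0)
loss_before = net.loss(XOR)
for _ in range(5000):
    for x, t in XOR:
        net.train_step(x, t, lr=0.5)
loss_after = net.loss(XOR)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Whether this small network fully solves XOR depends on the random initialisation, but the loss should drop as backpropagation adjusts the weights. Real projects would normally rely on a library with automatic differentiation rather than hand-written gradients.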

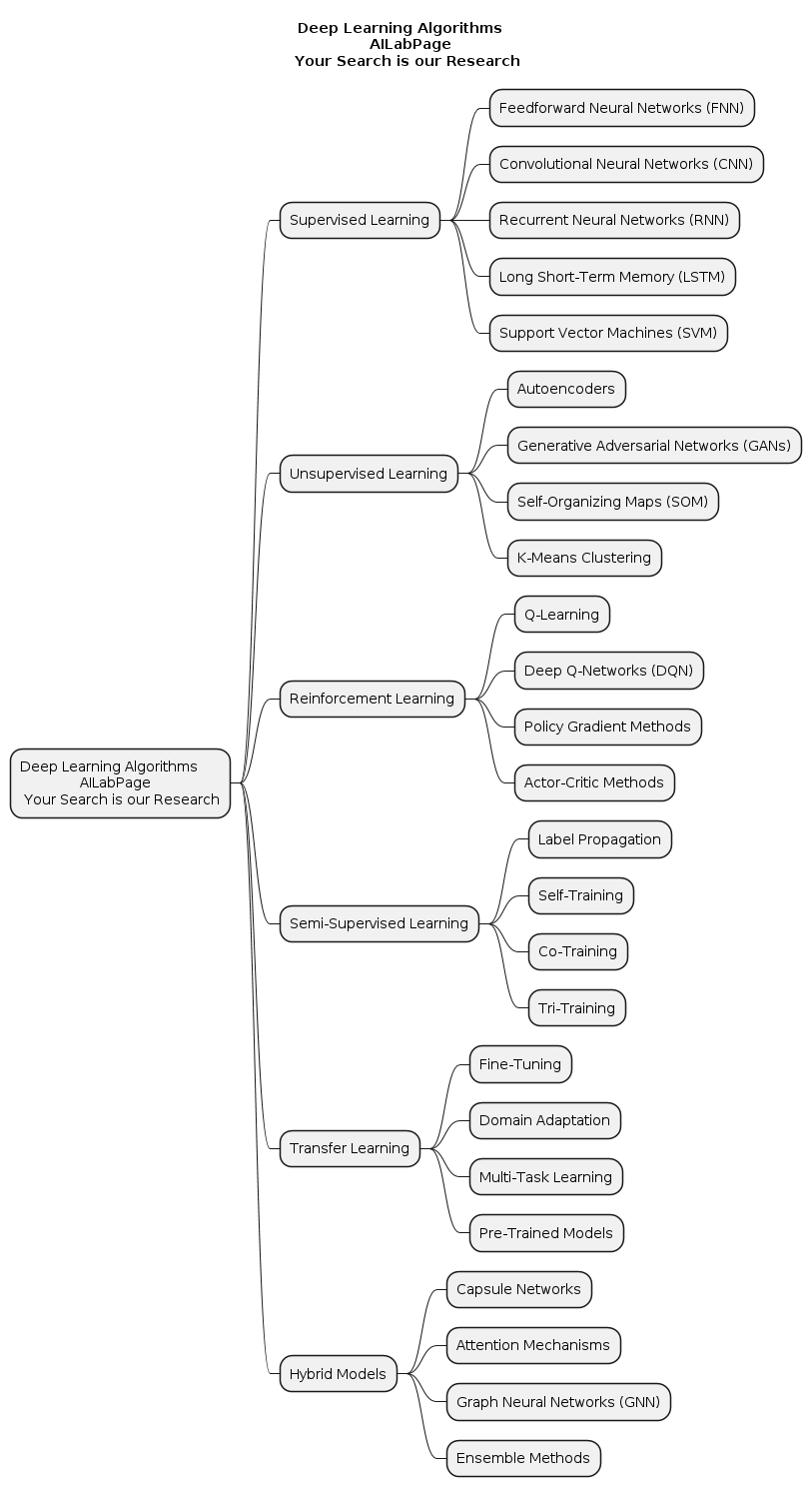

Deep Learning Algorithms

DL is a technique for implementing advanced machine learning. It is also known as deep structured learning or hierarchical learning. At the same time, deep learning has the power to stand on its own as an independent pillar of AI, and in my opinion DL will in future separate itself from machine learning. DL is a technique for achieving a goal, not necessarily a goal in itself.

Deep learning’s main drivers are artificial neural networks (or simply neural networks). Specialised versions of neural networks, such as convolutional neural networks and recurrent neural networks, address particular problem domains. Two of the best and most distinctive use cases for deep learning, based on methodologies like deep neural nets, are image processing and text/speech processing.

In practice, deep learning methods, specifically recurrent neural network (RNN) models, are used for complex predictive analytics such as share price forecasting, which consists of several stages. The broader machine learning field also includes approaches such as decision tree learning, inductive logic programming, clustering, reinforcement learning and Bayesian networks.
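The recurrence that lets an RNN use earlier values in a series can be sketched in plain Python as a minimal Elman-style forward pass over a scalar sequence (for example, a short run of prices). The weights are random and untrained, so the outputs are not forecasts; the sketch only shows the hidden state `h` being carried from one step to the next. `SimpleRNN`, the hidden size and the weight ranges are illustrative assumptions.

```python
import math
import random


class SimpleRNN:
    """Hypothetical Elman-style RNN: scalar input -> hidden state -> scalar output."""

    def __init__(self, hidden_size: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w_xh = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
        self.w_hh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
                     for _ in range(hidden_size)]
        self.b_h = [0.0] * hidden_size
        self.w_hy = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
        self.b_y = 0.0

    def step(self, x, h_prev):
        # The new state mixes the current input with the previous state.
        h = [math.tanh(self.w_xh[i] * x
                       + sum(w * hp for w, hp in zip(self.w_hh[i], h_prev))
                       + self.b_h[i])
             for i in range(len(h_prev))]
        y = sum(w * hi for w, hi in zip(self.w_hy, h)) + self.b_y
        return h, y

    def run(self, sequence):
        h = [0.0] * len(self.b_h)  # initial hidden state
        outputs = []
        for x in sequence:
            h, y = self.step(x, h)  # the "loop": h feeds into the next step
            outputs.append(y)
        return h, outputs


rnn = SimpleRNN(hidden_size=4, seed=42)
# Both sequences end with 1.0; the final states differ only because the
# hidden state carries information about the earlier inputs.
h_a, outputs_a = rnn.run([5.0, 0.0, 1.0])
h_b, outputs_b = rnn.run([0.0, 0.0, 1.0])
print(outputs_a)
print(h_a != h_b)
```

Training such a model for forecasting would add a loss on the outputs and backpropagation through time; gated variants such as LSTMs are usually preferred for longer sequences.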

Deep learning is among the first classes of algorithms that scale well with data: performance tends to keep getting better as we feed the algorithms more data. Speech/text and image processing, plus actions based on triggers, can make a perfect robot to start with. To declare such a robot perfect or best, though, it would have to pass four basic tests: the Turing test, earning a college degree, working as an employee for at least 20 years and doing well enough to earn promotions, and reaching ASI (artificial superintelligence) status.

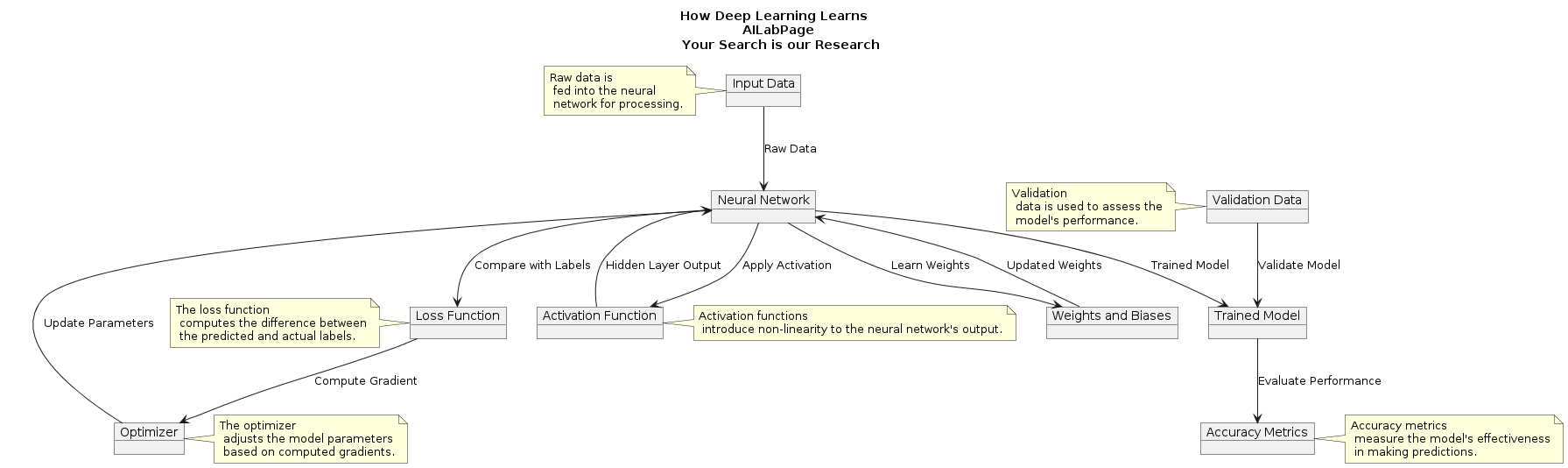

How Deep Learning Learns

Computational models of brain information processing, in vision and beyond, have largely used shallow architectures performing simple computations. The human brain has, to date, dominated computational neuroscience and will likely continue to do so for the next couple of decades.

Deep learning is based on multiple levels of features or representations, one per layer, with the layers forming a hierarchy from low-level to high-level features. Traditional machine learning focuses on feature engineering, whereas deep learning focuses on end-to-end learning based on raw features.

Deep learning is a powerful machine learning method because it can produce highly accurate predictive outputs from a given set of inputs. DL can use supervised or unsupervised learning to train a model. The term “deep” usually refers to the number of hidden layers in the neural network: traditional neural networks contain only 2–3 hidden layers, while deep networks can have 100 or even more.
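Since “deep” refers to the number of hidden layers, depth is simply a parameter of the architecture. The plain-Python sketch below builds fully connected networks from a list of layer sizes, runs a forward pass with ReLU hidden layers, and counts parameters, comparing a network with one hidden layer to one with ten. The helper names, the layer sizes and the He-style random initialisation are illustrative assumptions, and the networks are untrained.

```python
import random


def init_mlp(layer_sizes, seed=0):
    """Return one (weights, biases) pair per layer; hypothetical helper."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # He-style initialisation: Gaussian with variance 2 / n_in.
        weights = [[rng.gauss(0.0, (2.0 / n_in) ** 0.5) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(layers, x):
    """Apply each layer in turn: ReLU on hidden layers, identity on the output."""
    last = len(layers) - 1
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * xi for w, xi in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        x = z if i == last else [max(0.0, v) for v in z]
    return x


def count_parameters(layer_sizes):
    """Weights plus biases of every fully connected layer."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


shallow = [4, 8, 1]           # 1 hidden layer
deep = [4] + [8] * 10 + [1]   # 10 hidden layers
for sizes in (shallow, deep):
    net = init_mlp(sizes)
    out = forward(net, [0.1, 0.2, 0.3, 0.4])
    print(len(sizes) - 2, "hidden layers,", count_parameters(sizes), "parameters,", out)
```

With these sizes the shallow network has 49 parameters and the deep one 697; each extra hidden layer adds another stage in the hierarchy of features described above.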

Deep learning workflows split the data into training and test sets and, where feasible, use cross-validation. The model is computed from the training data, which is loaded into memory (in practice often streamed in mini-batches). This differs from DeepDream, which uses a trained network to generate new images or transform existing ones, giving them a dreamlike flavour, especially when applied recursively.
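Cross-validation can be sketched as a plain-Python generator that shuffles the sample indices once and yields k train/test splits in which each sample lands in the test fold exactly once. The function name `k_fold_indices` is a hypothetical helper; libraries such as scikit-learn offer the same idea as `KFold`. Because every fold means training the model again, deep learning projects often settle for a single held-out validation split instead.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(10, k=3))
for train_idx, test_idx in folds:
    print(len(train_idx), len(test_idx))
```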

Deep Learning vs Machine Learning

Deep Learning and Machine Learning are both subsets of artificial intelligence, yet they differ in scope and approach. Machine Learning focuses on algorithms that enable computers to learn from data and make predictions or decisions without being explicitly programmed.

Deep Learning, on the other hand, involves neural networks with multiple layers that can automatically extract features from raw data. While Machine Learning is versatile and suitable for various tasks, Deep Learning excels in tasks requiring complex pattern recognition, such as image and speech recognition. Both have unique strengths and applications, making them essential components of modern AI systems.

Machine learning laid the foundation for deep learning to grow and evolve, and deep learning has taken key features from machine learning models.

Interestingly, deep learning takes this a step further: in effect, it teaches itself new abilities and adjusts existing ones as it trains.

- Deep learning is a sophisticated technology whose self-learning capabilities can reach a very high degree of accuracy, often producing near-accurate results with faster processing.

- It learns high-level, non-linear features necessary for accurate classification.

- Deep neural networks are among the first families of machine learning algorithms that do not need manual feature engineering; instead, they learn high-level features on their own by processing raw data.

- Deep neural networks are fed raw data, from which they learn to find the object they are trained (or “being trained”) to recognise.

This type of algorithm (deep learning) has surpassed many earlier benchmarks for the classification of images, text and voice.

Frequently used jargon in deep learning

In deep learning, mastering the jargon is crucial for understanding and communicating effectively. From convolutional neural networks to backpropagation, this guide demystifies the common terms used in deep learning, empowering enthusiasts and practitioners alike to navigate this dynamic field with confidence.

- Perceptrons – A single-layer neural network. A perceptron is a linear classifier used in supervised learning. Its computing structure is based on the design of the human brain, and the algorithm takes a set of inputs and returns a set of outputs.

- Multilayer Perceptron (MLP) – A feedforward neural network with multiple fully connected layers that use nonlinear activation functions to handle data that is not linearly separable.

- Deep Belief Network (DBN) – DBNs are a type of probabilistic graphical model that learns a hierarchical representation of the data in an unsupervised way.

- Deep Dream – A technique invented by Google that tries to distil the knowledge captured by a deep Convolutional Neural Network.

- Deep Reinforcement Learning (DRL) – A powerful and exciting area of AI research, with potential applicability to a variety of problem areas. Other common terms in this area are Deep Q-Network (DQN) and Deep Deterministic Policy Gradient (DDPG).

- Deep Neural Network (DNN) – A neural network with many hidden layers. There is no fixed definition of the minimum number of layers a deep neural network must have; usually it is three or more.

- Recurrent Neural Networks (RNN) – A neural network for understanding context in speech, text or music. An RNN allows information to loop through the network, so earlier inputs can influence how later ones are processed.

- Convolutional Neural Network (CNN) – A neural network for image recognition, processing and classification. Object detection and face recognition are among the tasks where CNNs are widely used.

- Recursive Neural Networks – A hierarchical kind of network in which the input sequence has no time aspect but has to be processed hierarchically, in a tree fashion.
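To illustrate the perceptron entries, here is a plain-Python sketch of the classic perceptron learning rule (Rosenblatt's rule with a step activation). On the linearly separable AND function it converges to weights that classify every input correctly; on XOR, which is not linearly separable, no single perceptron can succeed, which is why multilayer perceptrons with nonlinear activations are needed. The function names, epoch limit and learning rate are illustrative assumptions.

```python
def predict(w, b, x):
    """Step activation: output 1 if the weighted sum exceeds 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule on (inputs, label) pairs with labels 0 or 1."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(w, b, x)  # -1, 0 or +1
            if error:
                # Nudge the weights so this sample is more likely to be classified correctly.
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly: converged
            break
    return w, b


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

for name, data in (("AND", AND), ("XOR", XOR)):
    w, b = train_perceptron(data)
    correct = sum(predict(w, b, x) == t for x, t in data)
    print(f"{name}: {correct}/{len(data)} correct")
```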

Delving into the world of deep learning often entails encountering a plethora of specialized terms and concepts. This comprehensive guide elucidates frequently used jargons, offering concise explanations and insights. Whether unraveling the mysteries of activation functions or deciphering the intricacies of recurrent neural networks, this resource equips learners with essential knowledge.
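Activation functions are the nonlinearities that let multilayer networks learn data that is not linearly separable. A plain-Python sketch of three common choices and their derivatives (which backpropagation multiplies through the layers) follows; the function names are illustrative, and deep learning libraries provide their own vectorised versions.

```python
import math


def sigmoid(z):
    """Squashes z into (0, 1); written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def tanh(z):
    """Squashes z into (-1, 1)."""
    return math.tanh(z)


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2


def relu(z):
    """Passes positive values through and zeroes out negative ones."""
    return max(0.0, z)


def relu_grad(z):
    # The derivative at exactly 0 is undefined; 0 is a common convention.
    return 1.0 if z > 0 else 0.0


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):+.3f}  relu={relu(z):.1f}")
```

Because the sigmoid's derivative is at most 0.25, multiplying many such factors through a deep stack shrinks the gradients, which is one reason ReLU-style activations are popular in deep networks.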

Why Deep Learning

If supremacy is the basis for popularity, then deep learning is surely almost there (at least for supervised learning tasks). Deep learning often attains the highest accuracy when trained with a huge amount of data. Deep learning algorithms can take highly unorganised, messy and unlabelled data such as video, images, audio recordings and text.

“The analogy to deep learning is that the rocket engine is the deep learning models and the fuel is the huge amounts of data we can feed to these algorithms.” – Andrew Ng

There are several advantages to using deep learning over traditional machine learning algorithms. Deep learning excels when the data set is large, but with small data sets, traditional machine learning algorithms are often preferable.

- Knowing the unknown – DL techniques outshine others where domain understanding for feature introspection is lacking, or where feature engineering is poorly understood, because they learn the features themselves.

- Nothing is complex – For complex problems such as image, video, voice recognition or natural language processing, DL works like a charm.

One of the biggest limitations of deep learning is that, unlike traditional machine learning algorithms, it requires high-end machines. GPUs have become a basic, taken-for-granted requirement for almost any deep learning work.

Shortcomings

On the other hand, when it comes to unsupervised learning, deep learning research has yet to show the kind of results seen in supervised learning tasks. Responses to the valid argument (if any) of “if not deep learning, then why not Hierarchical Temporal Memory (HTM)?” will be covered in upcoming posts.

Conclusion – Deep learning, in short, goes well beyond machine learning and its supervised or unsupervised algorithms. DL uses many layers of nonlinear processing units for feature extraction and transformation. Where traditional machine learning focuses on feature engineering, deep learning focuses on end-to-end learning based on raw features. Deep learning workflows split the data into training and test sets and, where feasible, use cross-validation. The good news is that anyone looking to learn deep learning needs only a working knowledge of calculus, fundamental algorithms and linear algebra, plus a strong grasp of probability theory. One thing is certain: studying deep learning gives anyone a deep understanding of the math behind neural networks and of how deep learning libraries work.

—

Books and Other Readings Referred To

- Research through the open internet – news portals, economic development report papers and conferences.

- Internet-based survey results from 30 AI experts

- Open internet and hands-on lab work by members of AILabPage (a group of self-taught engineers).

Feedback & Further Questions

Do you have any questions about deep learning or machine learning? Leave a comment or ask your question via email. I will try my best to answer it.

Points to Note:

All credit, if any, remains with the original contributors only. We have now expanded our earlier posts on “AI, ML and DL – Demystified” to focus on deep learning alone. You can find our earlier posts on Machine Learning – The Helicopter View, Supervised Machine Learning, Unsupervised Machine Learning and Reinforcement Learning.

============================ About the Author =======================

Read about Author at : About Me

Thank you all for spending your time reading this post. Please share your opinions, comments, critiques, agreements or disagreements. For more details about posts, subjects and relevance, please read the disclaimer.

