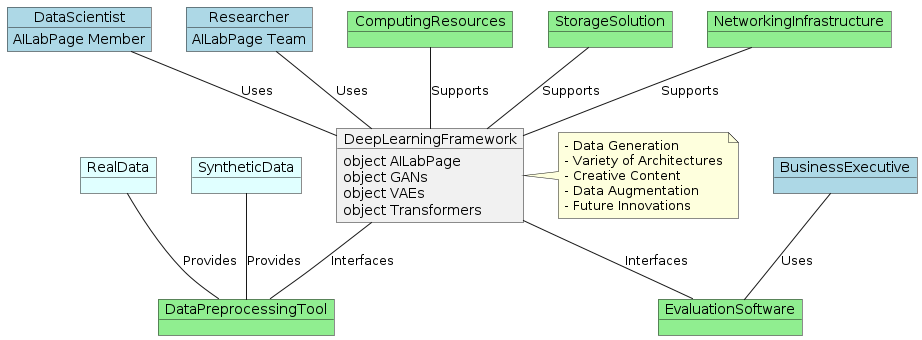

Generative Adversarial Networks (GANs) combine two neural networks into a very effective generative model that works in the opposite way to most other networks.

Most other neural network models take a complex input (such as an image) and produce a simple output (such as a class label); GANs do the opposite, turning a simple input (random noise) into a complex output. GANs are a very young member of the deep neural network family, introduced by Ian Goodfellow and his colleagues at the University of Montreal in 2014. GANs are a class of unsupervised machine learning algorithms.

—

Adversarial training is “the most interesting idea in the last 10 years in the field of machine learning.” (Yann LeCun, Facebook’s AI research director)

Let’s Unpack This Jargon – GANs Intro

A Generative Adversarial Network is simply another type of neural network architecture used for generative models. In simple words, it creates new samples that are similar to the training data but not identical to it.

As per the definition of the word “adversarial” found on the Internet, it means “involving two people or two sides who oppose each other”; an adversarial system of justice, for example, has the prosecution and the defense opposing each other. You might wonder why we need a system that generates almost real-looking images when such images can be misused more often than they are used correctly. You will find out in detail below.

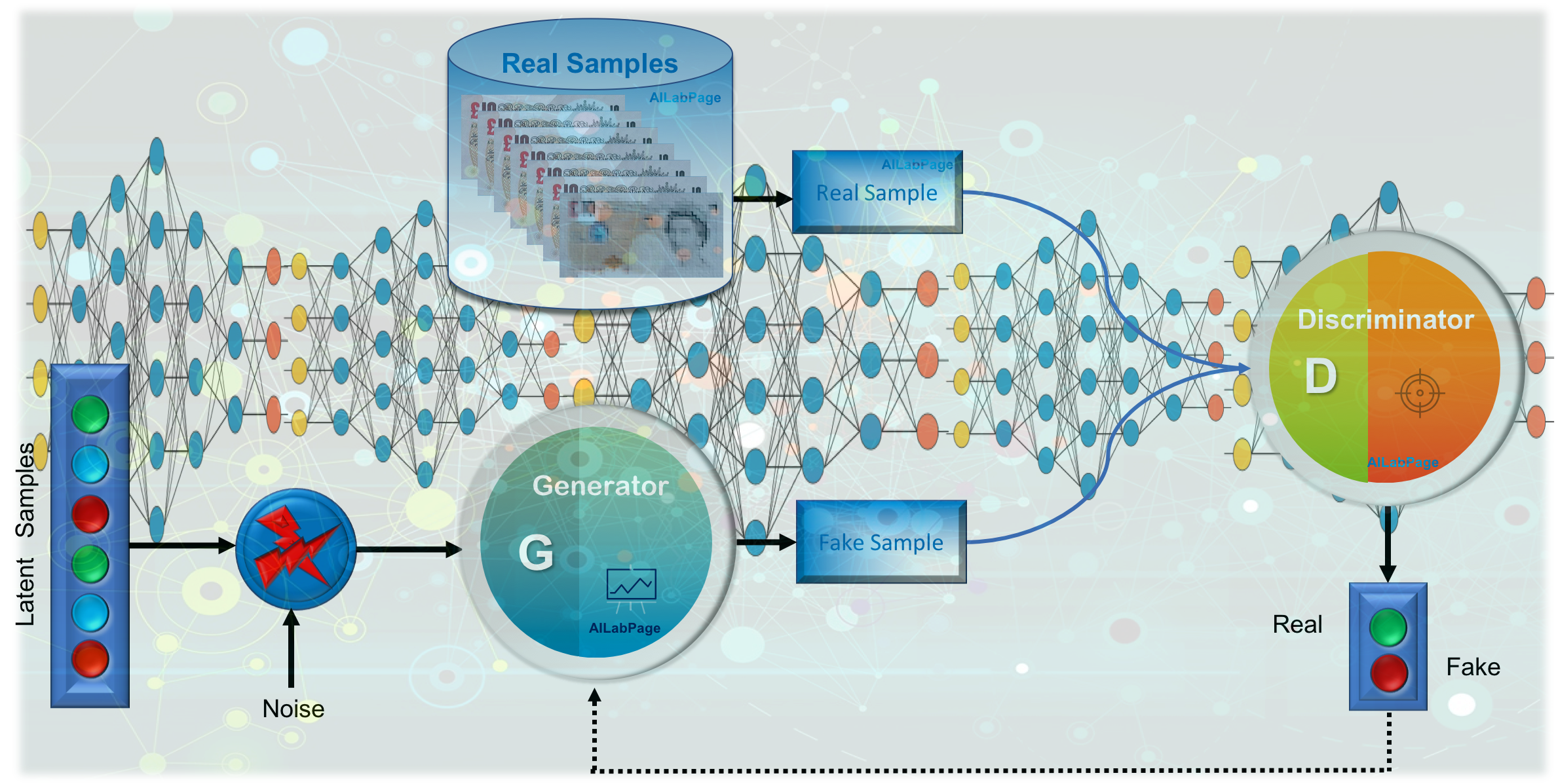

GANs are a class of algorithms used in an unsupervised learning environment. As the name suggests, they are called adversarial networks because they are made up of two competing neural networks that play a zero-sum game against each other. Each network is assigned a different role:

- The first neural network is called the Generator because it generates new data instances.

- The other neural network is called the Discriminator; it evaluates the Generator’s output for authenticity.

The cycle continues until the results are accurate or near-perfect. To understand GANs, let’s take a scenario from my home: my son is a much better chess player than I am, and if I want to become a better player, I should play against him. If you’re still confused, that’s OK; let me try to give a real-world example below.

Generative Neural Networks – Solid Bullet Points

In my realm of expertise and personal pursuits, which encompass Theoretical Physics, Deep Learning, Photography, and AI, I hold a profound affinity for GANs and perhaps allocate around 20% of my attention to CNNs. The impact of GANs on my endeavours has been truly remarkable, elevating my cognitive capacities and thought processes, and leading to significant improvements across various facets of my work domains.

- Data Generation: Generative neural networks (GNNs) specialize in creating novel, realistic data instances across various domains, including images, text, and music, by learning and mimicking patterns in existing data.

- Variety of Architectures: GNNs encompass various architectures like Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformers, each tailored for specific generative tasks, such as image synthesis, style transfer, and text generation.

- Creative Content: GNNs empower creative applications by producing original artwork, generating realistic images, composing music, and even crafting engaging stories through text generation, showcasing their potential for artistic expression.

- Data Augmentation: GNNs contribute to data augmentation in machine learning by generating additional training data, thereby enhancing model robustness and performance, particularly beneficial in scenarios with limited labeled data.

- Future Innovations: As a forefront technology, GNNs continue to evolve, paving the way for advancements in AI-driven content creation, virtual reality experiences, and personalized recommendations, reshaping how we interact with and consume digital content.

GNNs continue to evolve, and their impact on various industries and domains is poised to be profound, unlocking new avenues for creativity and advancing the boundaries of artificial intelligence. The journey of GNNs is a testament to the power of machine learning in unleashing my ingenuity and pushing the boundaries of what’s possible in the digital realm.

Looking into the GAN Realm

In the ethereal realm of GANs, the chess analogy above mirrors the synergy between generator and discriminator, each refining the other through adversarial collaboration. Just as in chess, where each move refines your understanding and strategy, GANs harness adversarial forces to sculpt data into astonishingly authentic creations. Your journey from apprentice to adversary mirrors the profound metamorphosis in GANs, shaping the contours of innovation through the crucible of competition and enlightenment.

The Generator

The generator network’s job is to create synthetic outputs, such as images or pictures, from random input. This neural network is called the Generator because it generates new data instances.

The Discriminator

The discriminator tries to determine whether its input is real or fake. In other words, it evaluates the generator’s output for authenticity.
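To make the two roles concrete, here is a minimal Rust sketch (Rust being the language this post uses later for its chess example) of the smallest possible pair, assuming one-dimensional data: a hypothetical generator that turns a noise value `z` into `a * z + b`, and a hypothetical discriminator that is plain logistic regression returning the probability that its input is real. Real GANs use deep networks for both; the names `Generator`, `Discriminator`, `generate`, and `prob_real` are illustrative only.

```rust
/// Logistic sigmoid: squashes any real number into the range (0, 1).
fn sigmoid(t: f64) -> f64 {
    1.0 / (1.0 + (-t).exp())
}

/// Hypothetical 1-D generator: turns a random noise value z into a synthetic sample.
struct Generator {
    a: f64, // scale applied to the noise
    b: f64, // shift applied to the noise
}

impl Generator {
    fn generate(&self, z: f64) -> f64 {
        self.a * z + self.b
    }
}

/// Hypothetical 1-D discriminator: logistic regression giving P(input is real).
struct Discriminator {
    w: f64, // weight
    c: f64, // bias
}

impl Discriminator {
    fn prob_real(&self, x: f64) -> f64 {
        sigmoid(self.w * x + self.c)
    }
}
```

For example, `Generator { a: 2.0, b: 1.0 }.generate(0.5)` returns `2.0`, and a discriminator with `w = 0.0` and `c = 0.0` answers 0.5 for every input, meaning it cannot tell real from fake at all.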

—

How do GANs Work?

As described in the image above, GANs consist of two neural networks: a generator that produces a fake image for our currency note example, and a discriminator that classifies it as real or fake. The generator’s role is to map its input to the desired data space (as in the example above). The discriminator, on the other hand, compares its input with the real dataset and outputs the probability that the input is real rather than fake.

The whole idea is for the two neural networks to face off against each other and get better with each attempt. The expected end result is a generator network that produces realistic or almost realistic outputs. The discriminator, in turn, is trained to do its job, which is to correctly classify input data as either real or fake. Its weights are updated so that the following probabilities are:

- Maximised – the probability that any real data input is classified as “belongs to the real dataset”

- Minimised – the probability that any fake data input is classified as “belongs to the real dataset”

The generator is trained to fool the discriminator by generating data as close to real as possible. At this step, the generator’s weights are updated so that the following probability is:

- Maximised – the probability that any fake data input is classified as “belongs to the real dataset”
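The probability targets above are commonly expressed as binary cross-entropy losses that each network minimises. Below is a minimal Rust sketch, assuming the discriminator’s outputs for a batch are already available as probabilities in [0, 1]. The generator loss uses the “non-saturating” form -ln D(G(z)), which the original 2014 GAN paper suggested in place of ln(1 - D(G(z))) because it gives stronger gradients early in training. The function names are hypothetical.

```rust
/// Natural log that clamps p away from 0, so a loss never becomes infinite.
fn safe_ln(p: f64) -> f64 {
    p.max(1e-12).ln()
}

/// Discriminator loss (binary cross-entropy), averaged over each batch.
/// Minimising it pushes D(real) towards 1 and D(fake) towards 0.
fn discriminator_loss(d_real: &[f64], d_fake: &[f64]) -> f64 {
    let real_term = d_real.iter().map(|&p| -safe_ln(p)).sum::<f64>() / d_real.len() as f64;
    let fake_term = d_fake.iter().map(|&p| -safe_ln(1.0 - p)).sum::<f64>() / d_fake.len() as f64;
    real_term + fake_term
}

/// Generator loss in the non-saturating form -ln D(G(z)), averaged over the batch.
/// Minimising it pushes D(fake) towards 1, i.e. fakes classified as real.
fn generator_loss(d_fake: &[f64]) -> f64 {
    d_fake.iter().map(|&p| -safe_ln(p)).sum::<f64>() / d_fake.len() as f64
}
```

With a maximally confused discriminator (every output 0.5), the discriminator loss is 2 ln 2 ≈ 1.386 and the generator loss is ln 2 ≈ 0.693.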

After several training iterations, we check whether the generator and discriminator have reached a point where no further improvement can be made. This is when the generator produces realistic (fake) synthetic data and the discriminator can no longer differentiate between fake and real. From the two scenarios above, it’s clear that during training the two loss functions are optimized in opposite directions. This is similar to our chess example, where both players feel they are at their best.
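The two opposite objectives are usually written as a single minimax game, as in the original paper by Goodfellow et al. (2014), where $p_{\text{data}}$ is the real data distribution and $p_z$ is the distribution of the generator’s input noise:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

For a fixed generator whose outputs follow a distribution $p_g$, the best possible discriminator is

$$
D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

so once the generator matches the data ($p_g = p_{\text{data}}$), $D^*(x) = 1/2$ everywhere. The discriminator can then do no better than a coin flip, which is exactly the “fails to differentiate between fake and real” end state described above.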

Steps Involved in Training GANs

Step 1: Problem definition: The goal needs to be very clear, for example whether to generate fake video from live video frames or to generate fake text. Without a clear goal, you won’t discover anything.

Step 2: Setting up and defining the architecture: This step must be designed with the problem and its requirements in mind, for example deciding whether the generator and the discriminator will be convolutional neural networks or just simple multilayer perceptrons.

Step 3: Discriminator training with real data: For example, we feed in real currency images (using convolutional neural networks), since that’s what we want to generate, and train the discriminator to correctly predict them as real.

Step 4: Discriminator training with fake inputs: Collect the generated data and train the discriminator to correctly predict it as fake.

Step 5: Generator training with the output of the discriminator: Now that the discriminator is trained, you can get its predictions and use them as an objective for training the generator. Train the generator to fool the discriminator.

Step 6: Loop: Repeat steps 3 to 5.

Steps 7 & 8: Checking and validating: Manually check the fake data for performance, then decide whether to continue training or stop.
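Steps 3 to 6 can be sketched end to end on a toy problem. The Rust code below is a minimal sketch under clearly stated assumptions: real data is one-dimensional and drawn from a hypothetical normal distribution N(real_mean, 1); the generator is the linear map `a * z + b`; the discriminator is logistic regression; gradients are derived by hand; and a small xorshift generator stands in for a random-number crate. All names (`Gan`, `discriminator_step`, `generator_step`, `train`) are illustrative rather than taken from any library, and steps 7 & 8 remain manual checks.

```rust
/// Tiny deterministic PRNG (xorshift64) so the sketch needs no external crates.
struct Rng(u64);

impl Rng {
    /// Uniform sample strictly inside (0, 1).
    fn uniform(&mut self) -> f64 {
        self.0 ^= self.0 << 13;
        self.0 ^= self.0 >> 7;
        self.0 ^= self.0 << 17;
        ((self.0 >> 11) as f64 + 0.5) / (1u64 << 53) as f64
    }

    /// Standard normal sample via the Box-Muller transform.
    fn normal(&mut self) -> f64 {
        let (u1, u2) = (self.uniform(), self.uniform());
        (-2.0 * u1.ln()).sqrt() * (2.0 * std::f64::consts::PI * u2).cos()
    }
}

fn sigmoid(t: f64) -> f64 {
    1.0 / (1.0 + (-t).exp())
}

/// Hypothetical 1-D GAN: generator x = a*z + b, discriminator D(x) = sigmoid(w*x + c).
#[derive(Clone, Copy, Debug)]
struct Gan { a: f64, b: f64, w: f64, c: f64 }

impl Gan {
    fn d(&self, x: f64) -> f64 {
        sigmoid(self.w * x + self.c)
    }

    /// Discriminator loss: -mean ln D(real) - mean ln(1 - D(fake)).
    fn d_loss(&self, real: &[f64], fake: &[f64]) -> f64 {
        let r = real.iter().map(|&x| -self.d(x).max(1e-12).ln()).sum::<f64>();
        let f = fake.iter().map(|&x| -(1.0 - self.d(x)).max(1e-12).ln()).sum::<f64>();
        r / real.len() as f64 + f / fake.len() as f64
    }

    /// Generator loss (non-saturating form): -mean ln D(G(z)).
    fn g_loss(&self, noise: &[f64]) -> f64 {
        noise.iter().map(|&z| -self.d(self.a * z + self.b).max(1e-12).ln()).sum::<f64>()
            / noise.len() as f64
    }

    /// Steps 3 and 4: one gradient-descent step on d_loss using a real and a fake batch.
    fn discriminator_step(&mut self, real: &[f64], fake: &[f64], lr: f64) {
        let (mut gw, mut gc) = (0.0, 0.0);
        for &x in real {
            let g = -(1.0 - self.d(x)) / real.len() as f64; // d/dt of -ln sigmoid(t)
            gw += g * x;
            gc += g;
        }
        for &x in fake {
            let g = self.d(x) / fake.len() as f64; // d/dt of -ln(1 - sigmoid(t))
            gw += g * x;
            gc += g;
        }
        self.w -= lr * gw;
        self.c -= lr * gc;
    }

    /// Step 5: one gradient-descent step on g_loss, with the discriminator frozen.
    fn generator_step(&mut self, noise: &[f64], lr: f64) {
        let (mut ga, mut gb) = (0.0, 0.0);
        for &z in noise {
            let x = self.a * z + self.b;
            let g = -(1.0 - self.d(x)) * self.w / noise.len() as f64; // dLoss/dx
            ga += g * z;
            gb += g;
        }
        self.a -= lr * ga;
        self.b -= lr * gb;
    }
}

/// Step 6: loop steps 3 to 5. Real data is assumed to follow N(real_mean, 1).
fn train(gan: &mut Gan, real_mean: f64, iters: usize, batch: usize, lr: f64, seed: u64) {
    let mut rng = Rng(seed | 1); // xorshift needs a non-zero state
    for _ in 0..iters {
        let real: Vec<f64> = (0..batch).map(|_| real_mean + rng.normal()).collect();
        let noise: Vec<f64> = (0..batch).map(|_| rng.normal()).collect();
        let fake: Vec<f64> = noise.iter().map(|&z| gan.a * z + gan.b).collect();
        gan.discriminator_step(&real, &fake, lr); // steps 3 and 4
        gan.generator_step(&noise, lr); // step 5
    }
}
```

This sketch makes no convergence promise. As the challenges listed later in this post point out, GAN training can oscillate, so in practice you would inspect samples (steps 7 & 8) rather than trust a fixed iteration count. A logistic-regression discriminator can also only draw a single threshold on the number line, which is one reason real GANs use deeper networks.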

Example

Let’s cast the “two neural networks” that compete as advisors and opponents in a GAN as two chess players.

I am the “generator,” and my son, who is much stronger than me at chess, is the “discriminator.”

If I played against him continuously, my game would certainly improve. After each game, I would analyze what I did wrong and what he did right, and then think of a strategy that would help me beat him in the next game.

The example makes it clear: in the realm of Generative Adversarial Networks (GANs), picture the dynamic interplay of two neural networks cast as both opponents and advisors.

Analysing our Example

I assume the role of the “generator,” while my son embodies the “discriminator,” possessing formidable prowess in the chess arena. An ongoing chess match between the two of us becomes a crucible for skill enhancement. Through persistent engagement, my strategic acumen evolves, informed by my son’s astute judgments.

I need to keep refining my strategy, improving and learning from each attempt, until I defeat my son. If this entire concept were programmed into my data models (strategy), it would come down to this: to get better at my game (as the generator), I need to be more clever and learn more from my powerful opponent (the discriminator). In real life, I never actually want to win against him; in GANs, however, that is not the case.

As the “generator,” my moves unfold with ingenuity, seeking to outmaneuver my adept “discriminator” counterpart. The games serve as a canvas for learning; each match is a vivid stroke revealing new possibilities and insights. The post-match analysis becomes a ritual, a crucial facet of my growth. Scrutinizing my moves and studying my son’s perceptive choices unveils the anatomy of strategic brilliance.

This iterative process fuels my evolution as a player. Every misstep metamorphoses into a stepping stone, a beacon illuminating areas for improvement. The realm of possibilities widens as I harness the wisdom embedded in each game, driving me to devise innovative tactics.

Yet, my aspiration transcends mere emulation. As I delve into the nuances of my son’s strategic choices, an overarching strategy takes shape. It’s a symphony of moves, orchestrated with finesse, poised to outwit my formidable “discriminator” in the impending duel. I synthesize the strategic fragments, crafting a multidimensional approach that carries the potential to disrupt patterns, capitalize on vulnerabilities, and engineer checkmate.

The fusion of my commitment, adaptive learning, the physics and mathematics side of my brain, and strategic ingenuity weaves a narrative of growth. With every move, I get an inch closer to the horizon of triumph, where the scales tip in my favour. The embodiment of perseverance, I prepare to challenge my son’s supremacy, armed with a refined strategy and unfazed by prior setbacks.

Enhancing Chess Game (Between Me and My Son) Strategy Through GANs

Training a GAN inspired by the chess analogy involves iterative steps to improve the “generator’s” game against the powerful “discriminator.” Please note that I have listed below all 10 steps that I used in the example above, but I have not defined all of them in code or in detail for free subscriptions. A sample code is attached below in picture format. You can use the steps and sample code below to build your own end-to-end game plan; trust me, it’s very easy. Here are the 10 steps to train the GAN in this context.

- Initialization: Initialize the “generator” and “discriminator” networks with their respective parameters and architectures.

- Generate Moves: Let the “generator” produce a set of chess moves as its output. These moves represent the “generator’s” attempt at improving its game.

- Evaluate Moves: Use the “discriminator” to evaluate the quality of the generated moves. The “discriminator” acts as a judge, assigning scores to the moves based on their perceived quality.

- Feedback Loop: Based on the “discriminator’s” evaluations, the “generator” receives feedback on the quality of its generated moves. The feedback could be in the form of scores or labels indicating good or bad moves.

- Strategy Update: The “generator” adjusts its strategy based on the feedback received from the “discriminator.” It learns from its mistakes and attempts to generate better moves in response.

- Analysis of Mistakes: Analyze the specific mistakes made by the “generator” during the move generation process. Identify patterns or weaknesses that the “discriminator” detected.

- Improve Strategy: The “generator” strategizes to overcome the detected weaknesses. It aims to create moves that counter the “discriminator’s” identified areas of strength.

- Re-Generate Moves: With the updated strategy, the “generator” produces a new set of chess moves, incorporating the lessons learned from the previous feedback.

- Continuous Iteration: Repeat steps 3 to 8 for multiple iterations. The “generator” progressively refines its strategy by learning from its own mistakes and the “discriminator’s” evaluations.

- Convergence: Over successive iterations, the “generator’s” moves become more sophisticated and challenging for the “discriminator” to evaluate. The goal is to achieve a convergence where the “generator” produces high-quality moves that outwit the “discriminator.”

Remember that the above steps and below sample code template provide a conceptual framework for training the GAN based on my chess game analogy. The actual implementation of neural networks, move generation, evaluation, and strategy updates would involve translating these concepts into code using appropriate algorithms and libraries. Additionally, GANs often involve more complex architectures and training techniques, so further research and experimentation would be necessary for a practical implementation.

Code for the Above Example – Chess Game (Me and My Son)

Implementing a GAN in Rust for the scenario in the above example is a bit complex due to the nature of GANs and the chess game analogy. However, here I document a high-level outline of how anyone might structure a simplified version of the above concept in Rust.
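The original outline was provided as an image. As a separate, minimal sketch (not the author’s code), the chess steps above can be illustrated in Rust under strong simplifying assumptions: each player’s “strategy” is a probability distribution over a small, fixed list of candidate moves; the son’s preferences (the “real” data) are a hypothetical fixed distribution; the discriminator keeps one logistic score per move; and expectations are computed exactly instead of by playing sampled games. The types and functions (`Generator`, `Discriminator`, `moves`, `update`, `play`) are hypothetical.

```rust
/// Softmax: turns raw preference scores into probabilities that sum to 1.
fn softmax(logits: &[f64]) -> Vec<f64> {
    let max = logits.iter().cloned().fold(f64::NEG_INFINITY, f64::max);
    let exps: Vec<f64> = logits.iter().map(|&l| (l - max).exp()).collect();
    let total: f64 = exps.iter().sum();
    exps.iter().map(|e| e / total).collect()
}

fn sigmoid(t: f64) -> f64 {
    1.0 / (1.0 + (-t).exp())
}

/// "Me": a strategy (preference scores) over a fixed list of candidate moves.
struct Generator {
    logits: Vec<f64>,
}

/// "My son": one score per candidate move; sigmoid(score) = P(the move looks like his).
struct Discriminator {
    scores: Vec<f64>,
}

impl Generator {
    /// Generate Moves: the current probability of playing each candidate move.
    fn moves(&self) -> Vec<f64> {
        softmax(&self.logits)
    }

    /// Feedback Loop / Strategy Update: one gradient step on the expected
    /// non-saturating loss sum_i p_i * (-ln D_i), using the judge's verdicts.
    fn update(&mut self, judge: &Discriminator, lr: f64) {
        let p = self.moves();
        let f: Vec<f64> = judge.scores.iter().map(|&s| -sigmoid(s).max(1e-12).ln()).collect();
        let avg: f64 = p.iter().zip(&f).map(|(pi, fi)| pi * fi).sum();
        for j in 0..self.logits.len() {
            // Softmax gradient of the loss with respect to logit j: p_j * (f_j - avg).
            self.logits[j] -= lr * p[j] * (f[j] - avg);
        }
    }
}

impl Discriminator {
    /// Evaluate Moves: one gradient step on the expected binary cross-entropy,
    /// treating the son's move distribution as real and mine as fake.
    fn update(&mut self, real: &[f64], fake: &[f64], lr: f64) {
        for i in 0..self.scores.len() {
            let d = sigmoid(self.scores[i]);
            let grad = -real[i] * (1.0 - d) + fake[i] * d;
            self.scores[i] -= lr * grad;
        }
    }
}

/// Continuous Iteration: `d_steps` judge updates, then one strategy update, per round.
fn play(player: &mut Generator, judge: &mut Discriminator, son: &[f64], rounds: usize, d_steps: usize, lr: f64) {
    for _ in 0..rounds {
        for _ in 0..d_steps {
            let mine = player.moves();
            judge.update(son, &mine, lr);
        }
        player.update(judge, lr);
    }
}
```

With my strategy frozen at uniform, the judge’s verdict for each move converges to p_son / (p_son + p_me), and a single strategy update then shifts probability toward the son’s favourite move. Whether many rounds of `play` settle down depends on the learning rate and the number of judge steps per round, echoing the instability discussed in the challenges below.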


The above code is just a glimpse: a simplified outline that lacks the complete intricacies of a full GAN implementation. In this scenario, the Generator generates chess moves, and the Discriminator evaluates the quality of those moves. The Generator then adjusts its strategy based on the feedback from the Discriminator.

Complexities such as training mechanisms, neural network architectures, and more sophisticated strategies are left out on purpose and are not available with a free subscription. Please take note of this, and study GANs and Rust programming further to build a more comprehensive and functional implementation.

Challenges with GANs

GANs have been applied mainly in the computer vision domain, both in research and in practical applications.

- GANs are less stable than other neural networks and are very difficult to train.

- Because of this instability, GAN training may never converge; the model’s parameters keep oscillating, which can cause overfitting.

- The two networks must be trained with a single backpropagation procedure, which makes it difficult to choose the correct objective.

- The generator often collapses and produces samples with limited variation (a problem known as mode collapse).

- The discriminator can reach its peak too quickly, which makes the generator’s gradient vanish, so the generator may not learn anything.

- GANs are extremely sensitive to hyperparameter selection.
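The vanishing-gradient problem in the list above can be seen with a little calculus. If the discriminator’s logit for a fake sample is t, so that D(G(z)) = sigmoid(t), the original generator objective ln(1 - D) has gradient -sigmoid(t) with respect to t, which all but disappears once the discriminator confidently rejects the fake (t very negative). The non-saturating alternative -ln D has gradient -(1 - sigmoid(t)), which stays close to -1 there. A minimal Rust sketch comparing the two (function names are hypothetical):

```rust
/// Logistic sigmoid: the discriminator's probability from its logit t.
fn sigmoid(t: f64) -> f64 {
    1.0 / (1.0 + (-t).exp())
}

/// Gradient of the original ("saturating") generator loss ln(1 - sigmoid(t)) w.r.t. t.
fn saturating_grad(t: f64) -> f64 {
    -sigmoid(t)
}

/// Gradient of the non-saturating generator loss -ln sigmoid(t) w.r.t. t.
fn non_saturating_grad(t: f64) -> f64 {
    -(1.0 - sigmoid(t))
}
```

At t = -6 (so D(G(z)) ≈ 0.0025), the saturating gradient has a magnitude of only about 0.0025, while the non-saturating one is about 0.9975.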

The most interesting use case of GANs is generating video and image sequences, for example to predict the frames of a video or GIF file.

Use Cases – Generative Adversarial Networks

Generative adversarial networks have several real-life business use cases; a few of them are listed below:

- Detection of counterfeit currency

- Creation of original synthetic (“fake”) artwork samples

- Simulation and planning using time-series data for use in video or audio


Conclusion: In this post, we have learnt some high-level basics of GANs (Generative Adversarial Networks). GANs are a recent development, but they look very promising and effective for many real-life business use cases. In the intricate fusion of Generative Adversarial Networks and the chessboard analogy, a symphony of innovation unfolds. This dual narrative resonates with the boundless potential of adversarial collaboration, illuminating the transformative power of synergy and creativity in both artificial intelligence and the human pursuit of mastery.

—

Points to Note:

All credits, if any, remain with the original contributors. We have covered all the basics of generative neural networks, although such tasks often struggle to choose between CNN and RNN algorithms as the best companion for finding information.

Books + Other material Referred

- Research through open internet, news portals, white papers and imparted knowledge via live conferences & lectures.

- Lab and hands-on experience of @AILabPage (Self-taught learners group) members.

- NIPS 2016 Tutorial: GANs

- Generative Adversarial Networks

- Unsupervised Representation Learning with Deep Convolutional GAN

Feedback & Further Questions

Do you need more details or have any questions on topics such as technology (including conventional architecture, machine learning, and deep learning), advanced data analysis (such as data science or big data), blockchain, theoretical physics, or photography? Please feel free to ask your question either by leaving a comment or by sending us an email. I will do my utmost to offer a response that meets your needs and expectations.

======================== About the Author ===================

Read about Author at : About Me

Thank you all for spending your time reading this post. Please share your opinions, comments, critiques, agreements, or disagreements. For more details about posts, subjects, and relevance, please read the disclaimer.


============================================================


